10 Things That Can Make Your Page Faster

Web pages keep growing, and as a result they take longer to load than they used to. In 2018 the average webpage weighed 1.8 MB and took approximately 8.5 seconds to load, whereas the advice is for a page to load in under 3 seconds. That is the magic line after which, on average, more than half of the users leave the page.

But does it have to be like this? Nothing suggests that the growth of internet apps will slow down, so are we doomed? Let’s check that!

A baseline

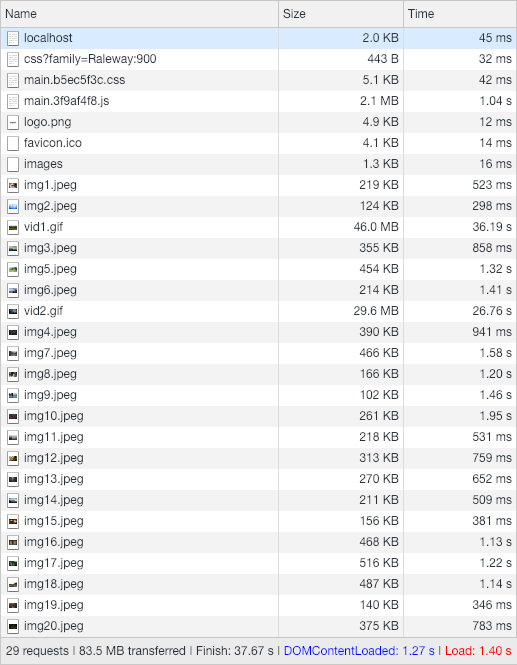

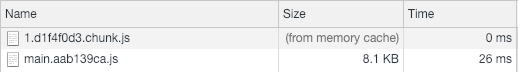

Suppose you were developing an app yourself. Let’s say it is… an Instagram for stock photography professionals. I don’t know why you would do this, but let’s imagine. You have spent some time on it and even came up with a name – ImageGuru. But it isn’t performing well enough. It’s not as high in the Google ranking as you had hoped, and a lot of people are leaving the page before it has even loaded! You are devastated and would like to find out why. You open the devtools, reload the page and see this… 82 MB of data downloaded, and in the end it took more than 30 s to load everything. Oh shoot… Gotta do something about it then.

1. Minification

OK, so the first suspicious thing is the size of the JS file – 2.1 MB alone?! And sure enough, you can easily spot the problem: the files aren’t minified. What does that mean? They are in their raw form, with all the whitespace, newlines, comments etc., which are obviously important while developing (have you tried debugging minified code? If not, I really encourage you to, it is great fun) but are of no use to the browser. So let’s add minification.

When using webpack, we can set mode: 'production' to enable TerserPlugin (which ships with webpack) and have it minify our JS. CSS is not handled by Terser, so it needs its own minimizer plugin such as css-minimizer-webpack-plugin. If you are not using webpack, there are many online tools that can help you with that, e.g. the JavaScript Minifier.

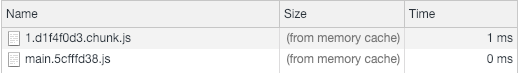

Adding minification alone got us from 2.1 MB to just 415 KB. That’s a decrease of about 80%. Nice start, but we can still improve on that.

2. Code splitting

Another thing we can add is code splitting. You may once again wonder what that is. Basically, we are going to split our app into smaller parts. Mainly, we are going to separate vendor code (code from external sources, e.g. npm) from the core code of our app. ‘But why?’ you may ask, ‘Isn’t making more requests bad?’ The answer to this question is ‘Yes and no’, but we will come back to this matter later.

Now, however, I’d like to explain why we want at least 2 files here. Caching is the key word. The 2 files I am talking about are:

- File with dependencies like react, vue or lodash – this one changes rarely, only when dependencies are added or updated, so it would be nice to cache it.

- File with your code – this one changes the most often of all the files you download.

Once again webpack comes to us with a helping hand.

    {
      optimization: {
        // ...
        splitChunks: {
          chunks: 'all',
        },
      },
    }

And that’s it! All we had to do was add `splitChunks.chunks`.

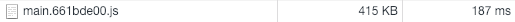

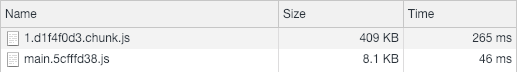

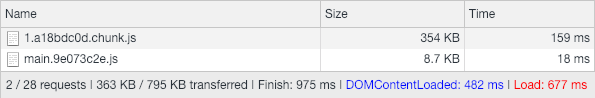

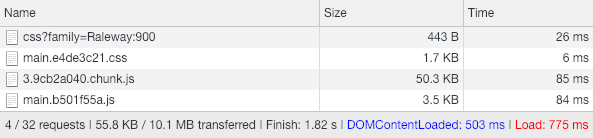

As you can see, the bundle size increased a bit, so at first it may seem that performance would get worse. But take a look at what happens when we reload the page.

The files were not downloaded from the server again; they were retrieved from the browser cache. But this would also happen if we didn’t split, right? Right, but take another look after we have changed something in our code.

A new bundle is created, but since no dependencies were changed or added, only the main file (the one with our code) was recompiled, and only that one has to be downloaded again. Thanks to that, we have to download just 8 KB in 18 ms instead of the whole 417 KB.
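The caching benefit depends on the vendor code landing in its own file whose URL stays the same between builds. Here is a sketch of a slightly more explicit config, assuming webpack 4 or newer. The `vendors` cache group and the `[contenthash]` file names are additions for illustration and were not part of the setup shown earlier.

```javascript
// Hypothetical webpack.config.js fragment.
const config = {
  output: {
    // The hash is derived from the file's content, so an unchanged
    // vendors file keeps its URL and can stay in the browser cache.
    filename: '[name].[contenthash].js',
  },
  optimization: {
    splitChunks: {
      chunks: 'all',
      cacheGroups: {
        // Everything imported from node_modules goes into one chunk.
        vendors: {
          test: /[\\/]node_modules[\\/]/,
          name: 'vendors',
          chunks: 'all',
        },
      },
    },
  },
};

module.exports = config;
```

In practice the vendors hash can still change for unrelated reasons; webpack’s caching guide describes further settings (such as extracting the runtime chunk) that help keep it stable.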

3. Tree shaking

Another technique that bundling our app with tools like webpack makes possible is tree shaking, i.e. not including unused code in the bundle. Unfortunately, this one requires a bit more work from us (and from the packages we are using) than just changing things in the config. But bear with me, it’s not hard.

In your app you are using lodash, a collection of useful utility functions. Using a plugin called webpack-bundle-analyzer, we can inspect what our bundle looks like and how much space each file takes.

You can clearly see here that lodash is taking up almost 16% of the whole bundle (69 KB to be precise) – this is how much the whole package weighs. However, we are actually using just one function from it, the get function. So there is no need to include the whole package.

Currently our file using get looks like this.

    import { getImages, getImageById } from '../api/images';
    import { get } from 'lodash';

    export const fetchImages = dispatch => async () => {
      const images = get(await getImages(), 'data.images', []);
      dispatch({
        type: 'IMAGES/FETCH_ALL',
        payload: images
      });
    }

    // ...

On line 2 we are importing the whole of lodash and then taking the get function out of it. The main lodash package is built as CommonJS, which webpack cannot tree-shake, so what we have to do instead is import just the get file from lodash.

    import { getImages, getImageById } from '../api/images';
    import get from 'lodash/get';

    export const fetchImages = dispatch => async () => {
      const images = get(await getImages(), 'data.images', []);
      dispatch({
        type: 'IMAGES/FETCH_ALL',
        payload: images
      });
    }

    // ...

So after some optimisation of the lodash and react-router-dom imports, we came down from 409 KB to 354 KB, and lodash shrank to just 7 KB. How could you not call that an improvement?

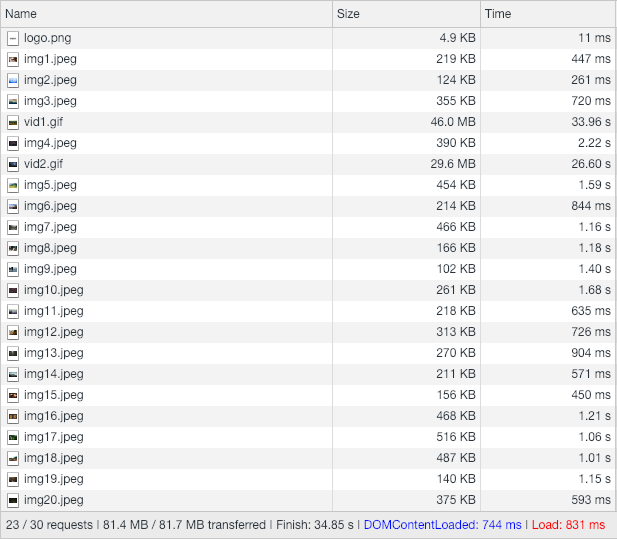

4. Asset optimisation

So far we have focused on JavaScript and CSS sizes, but most of the downloaded data was actually images – a staggering 81 MB (of which 75 MB are GIFs). I think that could be a little problematic.

GIF vs Video

Let’s start with those GIFs. The format is over 30 years old and we are still using it, but we should stop! As you can see, GIFs can be enormous – 46 MB for a 14-second video.

GIFs are convenient because we don’t have to treat them differently from other images: both normal images (JPEGs, PNGs) and GIFs are displayed using the <img /> tag, whereas videos need the <video /> tag. However, converting the file to MP4 brings it down to 7.5 MB. Quite a change.

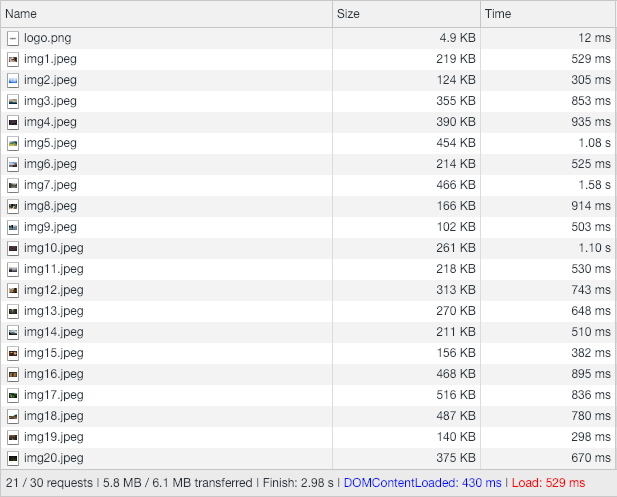

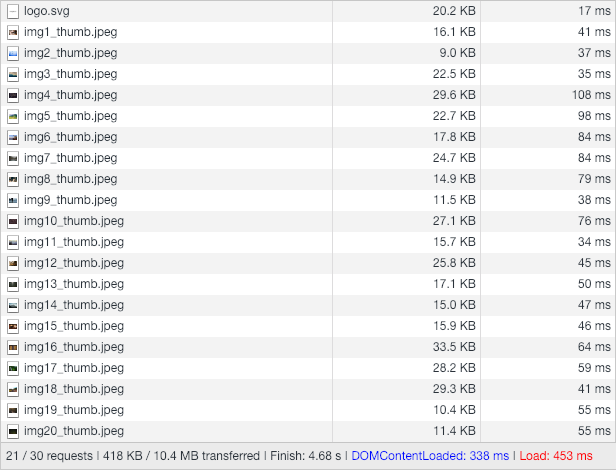
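Swapping the tag does not have to change how the clip behaves. As a rough sketch (the helper name and the file path are made up for illustration, and the path is assumed to be trusted since it is not escaped), a video can be made to act like a GIF with a few standard attributes:

```javascript
// Builds markup for a video that behaves like an animated GIF.
function gifLikeVideo(src) {
  // autoplay + loop + muted mimic a GIF; playsinline keeps the clip
  // inline on iOS instead of opening the fullscreen player.
  return `<video autoplay loop muted playsinline src="${src}"></video>`;
}

console.log(gifLikeVideo('/videos/clip.mp4'));
```

The muted attribute matters here beyond silence: browsers generally refuse to autoplay videos that have sound.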

Image optimisation

Then we can move on to the rest of the assets. The first thing we should look at is the image sizes. The images used for thumbnails and in the details view are exactly the same files, 1920x1080 each, so that they look good when stretched to cover the full width of a desktop screen. Most of the time, however, all of those pixels won’t be used, as nowadays more than half of internet traffic comes from mobile devices. So what we can do is change the resolution of each image. To do this you could use a CLI tool like node-thumbnail or a webpack plugin (like thumbnail-webpack-plugin, which relies on the former).

Images before optimisation

It is also a good idea to add some JPEG compression with imagemin. As with the previous tool, we install it globally. This time, however, we also have to add plugins for the appropriate formats.

    npm install -g imagemin-cli imagemin-mozjpeg
    imagemin public/images/* --out-dir public/images_opt --plugin=mozjpeg

Images after optimisation

And that’s it: from 5.8 MB down to 418 KB to download on start.

<picture> tag

Our images now have proper sizes and are compressed so that they take up less space. But is there anything more we could do to optimise the details page? Of course there is – introducing the <picture> tag! This tag was created specifically for this purpose. It allows us to define different images based on the screen size (and more, since it works using media queries). Here you can see how this tag can be used.

    <picture>
      <source srcSet={bigUrls.fullHd} media="(min-width: 720px)" />
      <source srcSet={bigUrls.hd} media="(min-width: 640px)" />
      <source srcSet={bigUrls.sd} />
      <img src={bigUrls.fullHd} />
    </picture>

Basically, we have an outer picture tag, and inside it we specify different sources for the image. The browser picks the first source whose media query matches: in this example, if the viewport is at least 720 px wide the full HD image is displayed, if it is at least 640 px wide the 720p image is displayed, and otherwise the 480p one. The img tag at the end is the element that actually renders the chosen image, and it also serves as a fallback for browsers that do not support this feature (IE for example).
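To make the selection rule concrete, here is a small sketch that imitates it in plain JavaScript. It only models the min-width checks from the example; a real browser evaluates full media queries, and the function name is hypothetical.

```javascript
// Sources in the same order as the <source> tags above.
const sources = [
  { minWidth: 720, name: 'fullHd' },
  { minWidth: 640, name: 'hd' },
  { minWidth: 0, name: 'sd' }, // no media attribute: always matches
];

// Returns the name of the first source whose min-width condition holds.
function pickSource(viewportWidth) {
  return sources.find(s => viewportWidth >= s.minWidth).name;
}

console.log(pickSource(1280)); // fullHd
console.log(pickSource(700));  // hd
console.log(pickSource(360));  // sd
```

Because the first match wins, the order of the sources matters: putting the 640 px rule first would hide the full HD image from wide screens.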

This time there won’t be any difference in load time on desktop, but if we check on mobile… Oh boy, half the time! We’re rocking it!

Image size and loading time on desktop on «Fast 3G»

Image size and loading time on mobile on «Fast 3G»

Muting videos

We started with videos, so let’s end with them. A common thing to do with videos on the web is to mute them. In your app you display videos among the images, and as there could be many of them on one page, you have to mute them. So you add the muted attribute to the video tag and you’re happy. But not so fast! Do we need the audio to be downloaded with the video if we always mute it? Obviously not. By removing the audio track from the video file we can save another 0.8 MB.
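One way to strip the audio track, assuming you have ffmpeg installed (any video tool with a similar option will do), is sketched below. The helper and file names are hypothetical, and the snippet only builds and prints the command rather than running it:

```javascript
// Builds the argument list for an ffmpeg call that drops the audio track.
function stripAudioArgs(input, output) {
  return [
    '-i', input,     // source file
    '-c:v', 'copy',  // copy the video stream as-is, no re-encoding
    '-an',           // leave the audio track out
    output,
  ];
}

const args = stripAudioArgs('clip.mp4', 'clip-muted.mp4');
console.log(['ffmpeg', ...args].join(' '));
// To actually run it: require('child_process').spawnSync('ffmpeg', args)
```

Copying the video stream instead of re-encoding keeps the picture quality untouched, so the only thing that changes is the missing audio.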

5. Prefetching

Another way to speed up the user’s experience on our page is prefetching data. Up to this point we have mainly focused on the first screen of the application, but there is one more thing in this prototype: we can view the description and comments of each image. Currently, the comments and description are downloaded only when you open an image, so after clicking you have to wait a second for the data to arrive. What we can do to speed this up is start downloading the data before the user even clicks on the image, e.g. on hover. A good example of this behaviour can be seen on pages built with GatsbyJS, where each blog post is downloaded on link hover. It adds that extra snappiness to the page.

An example of such prefetching on Dan Abramov’s blog
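A minimal sketch of hover prefetching, independent of any framework. All names here (createPrefetcher, fakeApi) are hypothetical, and the real app would pass in its own request function:

```javascript
// Wraps a fetch function so each id is requested at most once.
function createPrefetcher(fetchDetails) {
  const cache = new Map(); // id -> promise of the details

  function load(id) {
    if (!cache.has(id)) {
      cache.set(id, fetchDetails(id));
    }
    return cache.get(id);
  }

  return { prefetch: load, get: load };
}

// Stand-in for a real API call (hypothetical).
const fakeApi = id => Promise.resolve({ id, comments: [] });
const details = createPrefetcher(fakeApi);

details.prefetch(42);            // e.g. in an onMouseEnter handler
details.get(42).then(data => {   // e.g. in the onClick handler
  console.log('ready', data.id);
});
```

The click handler reuses the promise started on hover, so by the time the user clicks the data is often already there. Note that this simple version also keeps failed requests in the cache; a real implementation would probably want to evict those.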

6. Dynamic imports

Dynamic imports are part of the language as of ES2020, and thanks to tools like webpack they could be used even earlier, while the proposal was still at stage 3 of TC39. What they allow us to do is load parts of our code asynchronously and on demand, so we can trigger the download only when the code is needed. One use case would be an admin panel that loads only for a logged-in admin user. And fortunately your app has this functionality: you can change the description of an image.

Admin panel

You can see we have a nice form with material design inputs. This input comes from an external package that is used only in the admin panel, so it would be a nice idea to load it only when editing.

But first, we have to set it up. Again, webpack to the rescue! Webpack understands dynamic imports out of the box; adding chunkFilename lets us control how the resulting chunk files are named.

    {
      output: {
        filename: '[name].bundle.js',
        chunkFilename: '[name].bundle.js',
        // ...
      },
    }

Now, when we use a dynamic import with import('file.js'), the code inside that file will be extracted into a separate bundle and downloaded only when the import function is actually called.

As mentioned previously, the import is asynchronous, so you can expect it to return a promise.
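In vanilla JS that promise can be used directly. The sketch below wraps a dynamic import so that the module is requested on the first call and the same promise is reused afterwards. The helper loadOnce and the module path './AdminPanel.js' are hypothetical, and the usage is left in comments because it needs a real page and module:

```javascript
// Returns a function that runs `loader` on first call and reuses
// the same promise afterwards.
function loadOnce(loader) {
  let pending = null;
  return () => {
    if (pending === null) {
      pending = loader();
    }
    return pending;
  };
}

// './AdminPanel.js' is a hypothetical module; nothing is requested
// until loadAdminPanel() is called.
const loadAdminPanel = loadOnce(() => import('./AdminPanel.js'));

// Example usage, assuming the module default-exports a function:
// button.addEventListener('click', async () => {
//   const panel = await loadAdminPanel();
//   panel.default();
// });
```

Wrapping the import in a function is what keeps it lazy: calling import() at the top level of the file would start the download straight away.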

In React, a component has to be a function or a class, so a promise isn’t going to work directly. However, we can easily implement lazily loaded components with lazy. Instead of importing the component normally, with import at the top of the file, we assign the result of a dynamic import wrapped in lazy to a variable. Then, when rendering, we wrap our component in Suspense to provide a fallback that is shown until the component has loaded.

    import React, { Suspense, lazy } from 'react';

    const Component = lazy(() => import('./Component/Component'));

    export default () => (
      <Suspense fallback={(<div>Loading...</div>)}>
        <Component />
      </Suspense>
    );

Obviously this is not limited to React. Here is a nice article about code splitting in Vue.js: “Code Splitting With Vue.js And Webpack” from Vue.js Developers. And of course you can use dynamic imports in vanilla JS.

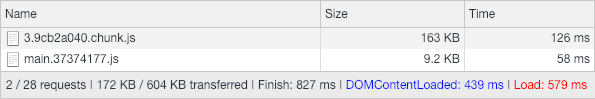

Initial bundle size before using dynamic import…

…and after

7. Service workers

Progressive Web Apps have been growing in popularity recently. And I’m not surprised, they are great. They can bring a native-like experience to the web thanks to push notifications, background sync and the ability to work offline. Most of that is achieved by a service worker sitting under the hood. What we can do is use it to speed up our app.

We’ll use just one of many features that service workers give us – cache.

But this cache is different from the one the browser uses for our files by default. Apart from serving files that have already been downloaded, you can cache files on demand and you can even cache requests. And all of this will be available offline! So it will work even if you have no internet connection. And all at a relatively low cost.

I won’t cover it here though, as it is a topic for another full article. If you use create-react-app, it has a simple service worker setup built in. All you have to do is follow the instructions in src/index.js, namely change the function called from unregister to register.

If you would like to read more about them, here is an introduction from Google Developers: “Service Workers: an Introduction” (Web Fundamentals). We also have a few different articles focusing on PWAs; you can find them under Progressive Web Apps.

OK, we have done a lot of work on the frontend side to speed up our page. Let’s move on to the configuration of the server serving our page.

8. gzip

The first thing we’ll cover here is gzip. You must have heard about regular zip files. These are archives that losslessly compress data so that it occupies less disk space. That sounds great, and it would be nice to use it on the web, considering that load times are quite important. Unfortunately, browsers don’t accept zip as a transfer encoding; they do, however, support gzip. And that’s great! Once again, here is the current amount of data downloaded from the server.

And here it is after enabling gzip

70% less! That’s a huge amount, and all of it on top of our previous optimisations. And setting it up is a piece of cake. For nginx servers, all you have to do is set gzip to on in your nginx.conf under the server section. There are several additional config options, of course, so this is what my config looks like in the end.

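    server {
      # ...
      gzip on;
      gzip_vary on;            # add "Vary: Accept-Encoding" to responses
      gzip_proxied any;        # also compress responses to proxied requests
      gzip_comp_level 6;       # 1 (fastest) to 9 (smallest)
      gzip_buffers 16 8k;
      gzip_http_version 1.1;
      gzip_min_length 256;     # skip responses smaller than 256 bytes
      # text/html is always compressed, so it is not listed here
      gzip_types text/plain text/css application/json application/javascript;
      # ...
    }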

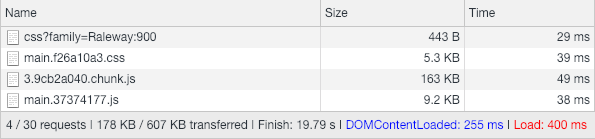
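To get a feeling for why text assets shrink so much, here is a small sketch using Node’s built-in zlib module. The repeated line is an artificial stand-in for a bundle, so the ratio it prints will be far better than what real code achieves:

```javascript
const zlib = require('zlib');

// Some repetitive, code-like text standing in for a JS bundle.
const source = 'export const fetchImages = dispatch => async () => {};\n'.repeat(200);
const raw = Buffer.from(source, 'utf8');

// Level 6 is the same compression level as in the nginx config.
const compressed = zlib.gzipSync(raw, { level: 6 });

const saved = (1 - compressed.length / raw.length) * 100;
console.log(`${raw.length} B -> ${compressed.length} B (${saved.toFixed(1)}% smaller)`);
```

gzip works by replacing repeated sequences with short references, which is exactly why JS, CSS and JSON benefit from it while already-compressed formats like JPEG or MP4 barely change.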

9. HTTP/2

Do you remember when I said that making more than one request is not that bad? This is the reason. HTTP/2 is a major revision of the HTTP protocol, which itself dates back to the early 90s. It was created to address multiple issues with the older versions, but the main thing that interests us here is that it implements multiplexed streams. These, in short, allow multiple requests and responses to be transmitted in parallel over a single connection between the server and the client.

One caveat is that the website needs an SSL certificate, as browsers support HTTP/2 only over HTTPS. However, this shouldn’t be an issue, as certificates should be standard by now, especially thanks to organisations like Let’s Encrypt that can supply you with free ones.

And enabling it is really easy, especially if you already have HTTPS enabled.

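    server {
      # On nginx 1.25.1 and newer, a separate "http2 on;" directive
      # replaces the http2 parameter of listen.
      listen 443 ssl http2;
      # ...
    }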

And that’s it. It should now work on HTTP/2!

As you can see, all of the data is now being downloaded almost at once, concurrently. With HTTP/1.1, each connection handles one request at a time and browsers open only a handful of connections per host, so files had to wait in a queue for the previous ones to finish downloading.

If you’d like to know a bit more about HTTP/2, please take a look at this great article from Kinsta: “What is HTTP/2 – The Ultimate Guide”.

10. CDN

The last thing to consider is using a CDN – a Content Delivery Network. This is basically a grid of servers scattered around the world that cache your static files. Let’s suppose you have your server in Europe and the user is in the USA. Every time they query the server, the request has to reach Europe, which is quite a distance, isn’t it? (Even in perfect conditions, the best the speed of light allows is 26 ms one way.) With the help of a CDN, the response would be cached on servers in the USA, Asia and elsewhere, and then served from the one closest to the user. Thanks to that, the user’s request doesn’t have to make the trip to Europe, and only the CDN’s servers check the state of that file once in a while.
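The 26 ms figure can be checked with a quick back-of-the-envelope calculation. The distance below is an assumption (7,800 km, chosen because it reproduces the figure; the actual route is not stated), so treat the result as an order of magnitude rather than a measurement:

```javascript
const SPEED_OF_LIGHT_KM_S = 299792.458; // in a vacuum
const DISTANCE_KM = 7800;               // assumed Europe to USA distance

const oneWayMs = (DISTANCE_KM / SPEED_OF_LIGHT_KM_S) * 1000;
console.log(`${oneWayMs.toFixed(1)} ms one way`); // 26.0 ms one way

// Light in optical fibre travels at roughly two thirds of that speed.
const fibreMs = oneWayMs / (2 / 3);
console.log(`about ${Math.round(fibreMs)} ms one way in fibre`);
```

A request needs a round trip, and real routes add routing and processing delays on top, so the latency a user actually sees is several times higher than this lower bound.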

Setting all of this up is also quite easy. Let’s take Cloudflare as an example. To set up their service, you have to create an account on their page and then point your domain’s nameservers to theirs. And that’s all. And you get HTTPS and other features in the package.

To learn more about CDNs and how they work, I encourage you to read this article from Cloudflare.

Endnote

Well, I think we are not doomed. You can see that making this page faster is not that hard: we went from 34 seconds down to just 480 ms. That’s 98% less! And even if we don’t count the GIFs that took 75 MB, it’s still a 93% improvement. Most of the changes were seemingly small and easy, but the outcome is staggering. To finish our work, we’ll run the Audit tool built into the Chrome devtools and let the numbers speak for themselves.

Before

And after

Photo by Marc-Olivier Jodoin on Unsplash