How to Build Scalable Cloud Infrastructure?

From security and reliability to automation, scalable cloud computing has many advantages – we delve into them and more in this informative post.

When should you think about scalable cloud computing? Right from the outset, when you’re architecting your cloud computing solution. Why?

If a scalable cloud isn’t implemented right from the start, you run the risk of needing to redesign your existing infrastructure in the future as your business grows. Fortunately, taking care of cloud scaling and preparing for future expansion doesn’t necessarily mean huge costs and a lot of time.

In this article, we explore the intricacies of scalable cloud computing: types of scaling, building scalable cloud architecture to achieve cloud scalability, and AWS services you can use to build a scalable business model. Read on for the lowdown.

What is cloud scalability?

Cloud computing scalability is the ability of cloud-hosted environments to increase or decrease their capacity in response to changing loads. In other words, a scalable solution adjusts itself to handle increased traffic without overprovisioning, and cuts costs when loads are lighter than usual. The benefits of cloud scalability include convenience, flexibility, cost savings, and improved disaster recovery.

Definition and Importance of Scalability

Scalability in cloud computing refers to the ability of a cloud infrastructure to scale up or down resources to meet changing workload demands. This flexibility allows businesses to easily add or remove computing resources as needed, without the need for significant hardware investment or major infrastructure changes. Scalability is a crucial aspect of cloud computing, enabling organizations to quickly adapt to fluctuating demands and ensure optimal performance and customer satisfaction. By leveraging scalable cloud infrastructure, businesses can maintain high levels of service availability and responsiveness, which are essential for staying competitive in today’s fast-paced digital landscape.

Benefits of Scalability

The benefits of scalability in cloud computing are numerous and impactful for businesses of all sizes.

Firstly, scalability allows organizations to quickly adapt to changing demands, ensuring that their applications and services remain responsive and performant even during traffic spikes. This adaptability leads to optimal performance and enhanced customer satisfaction, as users experience minimal downtime and fast response times.

Cost savings are another significant benefit of scalability in cloud computing. By only paying for the resources that are actually needed, businesses can avoid the expenses associated with overprovisioning. This pay-as-you-go model ensures that companies can manage their budgets more effectively and allocate resources where they are most needed.

Moreover, scalability enables businesses to scale globally and reach customers in different regions without the need for physical infrastructure in each location. This global reach is facilitated by cloud providers who offer scalable solutions that can be deployed across multiple geographic regions, ensuring consistent performance and availability for users worldwide.

In summary, the benefits of scalability in cloud computing include the ability to quickly adapt to changing demands, cost savings through efficient resource utilization, and the capability to scale globally, all of which contribute to improved performance and customer satisfaction.

Cloud elasticity vs. cloud scalability

Cloud elasticity is the ability of a cloud environment to match resource allocation to the current workload, typically automatically and over short time frames. Elasticity helps you reduce spending on cloud infrastructure by meeting demand without overprovisioning resources.

Cloud scalability, on the other hand, is the ability to increase or decrease resource allocation so the system can handle variable and growing workloads. It’s a property of the environment’s design that keeps your system’s performance from dropping as loads grow.

Types of scalability in cloud computing

Scaling in cloud computing means changing the capacity of your resources to handle traffic, and there are different ways to approach it. There are three types of scaling: vertical, horizontal, and diagonal.

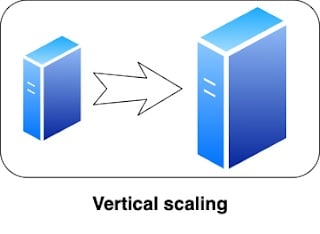

Vertical scaling

Vertical scaling is also referred to as scaling up or down. It’s the most basic type of scaling and should work right away with almost any kind of application. It means increasing the resources allocated to an instance (e.g. CPU or RAM) to provide sufficient processing power without changes to your code or app.

By adding more resources to instances hosting your application, you basically increase its ability to serve content to a larger group of users.

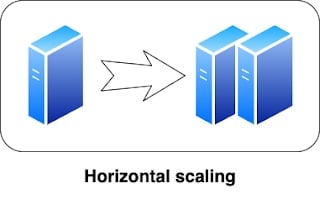

Horizontal scaling

When you think of scaling in or out, that's horizontal scaling. This one's about increasing the number of instances working in parallel, while keeping single instance resource allocation unaltered.

Diagonal scaling

Diagonal scaling is a combination of vertical and horizontal scaling. While your instances are scaled out to handle more traffic, they can be scaled up at the same time. Additionally, you can configure settings to trigger automatic scaling based on usage thresholds, ensuring optimal resource management and preventing performance degradation.

This one’s useful when a single process on one instance begins to consume most of its allocated resources – that instance is scaled up and the rest of the traffic is served by other, smaller units spawned by scaling out.
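
To make the three scaling types concrete, here is a minimal sketch that models a group of instances and the total capacity each approach yields. The `Fleet` class and the function names are hypothetical and exist only for illustration, and the model simplifies diagonal scaling by assuming all instances stay identical.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Fleet:
    """A group of identical instances (hypothetical model, not a cloud API)."""
    instances: int            # number of instances running in parallel
    vcpus_per_instance: int   # size of each instance

    @property
    def total_vcpus(self) -> int:
        return self.instances * self.vcpus_per_instance


def scale_up(fleet: Fleet, factor: int) -> Fleet:
    """Vertical scaling: each instance gets bigger, the count stays the same."""
    return replace(fleet, vcpus_per_instance=fleet.vcpus_per_instance * factor)


def scale_out(fleet: Fleet, extra: int) -> Fleet:
    """Horizontal scaling: more instances, each the same size as before."""
    return replace(fleet, instances=fleet.instances + extra)


def scale_diagonally(fleet: Fleet, factor: int, extra: int) -> Fleet:
    """Diagonal scaling: scale up and scale out in one step."""
    return scale_out(scale_up(fleet, factor), extra)


base = Fleet(instances=2, vcpus_per_instance=2)    # 4 vCPUs in total
print(scale_up(base, 2).total_vcpus)               # 8
print(scale_out(base, 2).total_vcpus)              # 8
print(scale_diagonally(base, 2, 2).total_vcpus)    # 16
```

Scaling up and scaling out reach the same total here, but only scaling out adds redundancy, because the load is spread across more machines.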

Building scalable cloud architecture

It’s important to think about scalable architecture right from the start of the infrastructure designing process. Why? Scalable cloud computing has multiple advantages for businesses of all sizes.

First of all, scalable infrastructure is cost-efficient, because you’re not paying for resources you don’t use. Using highly scalable cloud services, you’re able to build reliable solutions that are resilient to workload spikes.

Also, because cloud providers emphasize infrastructure security, cloud services are packed with security features. What’s more, postponing the implementation of scalability in your infrastructure is a risky play. Why?

Imagine your product launch is a huge success and your application user base is growing unexpectedly fast. In turn, your infrastructure starts to get overloaded and you have to take the app down and redesign your environment. Situations like these can cost your business customers and money.

So, what is a good way to approach building your scalable cloud architecture?

Designing for Scalability

Designing for scalability is essential to ensure that a cloud infrastructure can handle increased workload demands without compromising performance. This involves planning and implementing a scalable architecture that can adapt to changing demands. By considering scalability from the outset, businesses can build a robust cloud infrastructure that supports growth and ensures consistent performance.

Modularity

Everything starts with your application, so we recommend a modular design. Microservices (a modular approach) break complex components down into smaller, less complicated parts that are easier to scale, secure, and manage than monolithic applications.

Containerization

If your app is modular, containerize it. By leveraging the capabilities of third-party cloud providers, you can ensure that your application has the infrastructure and resources it needs to scale efficiently. For example, running your application with tools like Docker and Kubernetes lets you take care of cloud scalability from a single control panel. We also advise that you make your application stateless and keep your app image as lightweight as possible. This makes scaling easier, faster, and imperceptible to users.
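
Why does statelessness matter for scaling? Here is a minimal sketch, assuming per-user state lives in a shared external store rather than in the memory of any one instance. The `InMemoryStore` class is a hypothetical stand-in for a real cache or database, and `AppInstance` is an illustrative name, not part of any framework.

```python
from typing import Dict, Protocol


class SessionStore(Protocol):
    """Anything that can read and write a counter per key."""
    def get(self, key: str) -> int: ...
    def set(self, key: str, value: int) -> None: ...


class InMemoryStore:
    """Stand-in for an external store such as a cache or a database."""

    def __init__(self) -> None:
        self._data: Dict[str, int] = {}

    def get(self, key: str) -> int:
        return self._data.get(key, 0)

    def set(self, key: str, value: int) -> None:
        self._data[key] = value


class AppInstance:
    """One replica of the app. It keeps no per-user state of its own."""

    def __init__(self, name: str, store: SessionStore) -> None:
        self.name = name
        self.store = store

    def handle_visit(self, user_id: str) -> int:
        # Read-modify-write is not atomic here; a real store would need
        # an atomic increment to be safe under concurrent requests.
        count = self.store.get(user_id) + 1
        self.store.set(user_id, count)
        return count


shared = InMemoryStore()
first = AppInstance("app-1", shared)
second = AppInstance("app-2", shared)   # added later by scaling out

print(first.handle_visit("user-42"))    # 1
print(second.handle_visit("user-42"))   # 2, the new replica sees the same state
```

Because no replica owns the state, any of them can be added or removed without users noticing.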

Designing for Scalability from the Start

Designing for scalability from the start involves considering the potential growth of an organization and planning the cloud infrastructure accordingly. This includes choosing a cloud provider that offers scalable solutions, implementing a scalable architecture that can adapt to changing demands, and using auto-scaling and load balancing to ensure optimal performance. By taking these steps, businesses can create a cloud infrastructure that is prepared for future growth and can handle increased workload demands without compromising performance.

Using Auto-Scaling and Load Balancing

Auto-scaling and load balancing are essential tools for achieving scalability in cloud computing. Auto-scaling allows organizations to automatically add or remove computing resources based on workload demands, ensuring that the right amount of resources is always available. This helps to prevent overprovisioning and reduces costs by only paying for the needed resources.
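
As a rough illustration of how an auto-scaler might decide on capacity, the sketch below uses a proportional rule: the desired instance count grows or shrinks with the ratio of the observed metric to its target. This has the same shape as the rule documented for the Kubernetes Horizontal Pod Autoscaler, but the function name, parameters, and clamping are assumptions made for this example, not any provider's actual implementation.

```python
import math


def desired_capacity(
    current: int,
    metric: float,
    target: float,
    min_size: int,
    max_size: int,
) -> int:
    """Return how many instances to run so the metric moves toward its target.

    current  -- instances running now
    metric   -- observed average per instance (e.g. CPU utilization in percent)
    target   -- the value we would like the metric to settle at
    """
    if current < 1 or target <= 0:
        raise ValueError("current must be >= 1 and target must be > 0")
    raw = math.ceil(current * metric / target)
    # Never go below the floor or above the ceiling configured for the group.
    return max(min_size, min(max_size, raw))


print(desired_capacity(4, 90.0, 60.0, min_size=2, max_size=10))   # 6: scale out
print(desired_capacity(4, 30.0, 60.0, min_size=2, max_size=10))   # 2: scale in
print(desired_capacity(4, 300.0, 60.0, min_size=2, max_size=10))  # 10: capped
```

The minimum and maximum bounds matter as much as the rule itself: the floor protects availability, and the ceiling protects your budget.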

Load balancing, on the other hand, ensures that incoming traffic is distributed evenly across multiple servers, preventing any single server from becoming overloaded. This not only improves performance but also enhances the reliability and availability of applications and services.
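
Round-robin is one of the simplest ways to spread traffic evenly. The sketch below is a toy version under that assumption: the class and server names are hypothetical, and real load balancers typically also run health checks and may use other strategies such as least connections.

```python
from collections import Counter
from itertools import cycle
from typing import Iterable, Iterator, List


class RoundRobinBalancer:
    """Hands out servers in a fixed rotation so each gets an equal share."""

    def __init__(self, servers: Iterable[str]) -> None:
        self._servers: List[str] = list(servers)
        if not self._servers:
            raise ValueError("at least one server is required")
        self._rotation: Iterator[str] = cycle(self._servers)

    def route(self) -> str:
        """Return the server that should handle the next request."""
        return next(self._rotation)


balancer = RoundRobinBalancer(["app-1", "app-2", "app-3"])
hits = Counter(balancer.route() for _ in range(9))
print(hits)  # each of the three servers handled 3 of the 9 requests
```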

By using auto-scaling and load balancing, organizations can ensure that their cloud infrastructure is scalable and can handle increased workload demands without compromising performance. This enables organizations to quickly adapt to changing demands, improve performance and customer satisfaction, and reduce costs by efficiently managing computing resources.

Overall, designing for scalability and using auto-scaling and load balancing let organizations adapt quickly to changing demands while maintaining performance and customer satisfaction.

Reliability

Many managed cloud services are highly available by default, and some offer cross-region replication. By using those services, you minimize downtime and make your application more reliable and resilient. After all, if you lose availability, you'll most likely lose trust and wave goodbye to some customers, meaning lost money too.

Monitoring

Monitoring is an important foundation for automation: it helps you understand what can and should be automated, which minimizes human error while increasing consistency and speed. Monitoring infrastructure parameters also helps you manage data storage capacity, so that your system can scale efficiently to meet growing or fluctuating demands. Moreover, by creating alarms for abnormal states, you’re able to make data-driven scaling decisions.
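
An alarm for an abnormal state usually should not fire on a single noisy reading. Here is a minimal sketch of one common approach, assuming an alarm that triggers only when the metric stays above a threshold for several consecutive datapoints. The function name and parameters are hypothetical and do not mirror any particular monitoring service.

```python
from typing import Sequence


def alarm_breached(datapoints: Sequence[float], threshold: float, periods: int) -> bool:
    """Return True when the last `periods` datapoints are all above `threshold`."""
    if periods < 1:
        raise ValueError("periods must be at least 1")
    if len(datapoints) < periods:
        return False  # not enough data yet to decide
    return all(value > threshold for value in datapoints[-periods:])


cpu = [40.0, 85.0, 90.0, 95.0]
print(alarm_breached(cpu, threshold=80.0, periods=3))   # True: sustained load

spiky = [85.0, 90.0, 40.0, 95.0]
print(alarm_breached(spiky, threshold=80.0, periods=3)) # False: one dip resets it
```

Requiring several consecutive breaches trades a little reaction time for fewer unnecessary scaling actions.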

Automation

Auto-scaling makes life easier, allowing you to scale resources in response to increased traffic without the involvement of engineers. It is based on monitored metrics and is a handy way to manage costs – you only use as much server power as you need, when you need it. There's also continuous integration and continuous delivery (CI/CD) to consider, meaning faster and automated application deployment.

Security

Security is vital: if you're unable to protect your services, your business suffers. To that end, it's imperative to think about security as part of the initial design. Cloud services have many built-in security features. For example, AWS provides Identity and Access Management (IAM) for access control, security groups for network traffic filtering, and CloudTrail for audit logging.

AWS services to build scalable computing environments

Scalability is one of the main advantages cloud environments offer over on-premises ones. Cloud solutions eliminate the need for physical hardware, allowing businesses to quickly scale applications and services without the lengthy setup associated with traditional equipment. Several cloud-native services provide high scalability and reliability out of the box, including:

- ASG (Auto Scaling Group) – This is basically a group of EC2 virtual machines, and the simplest way of providing scalability to your application. Just prepare a machine image (AMI) with your application, then configure scaling policies to scale the environment using your own custom CloudWatch alarms or predefined metrics such as CPU or network utilization.

- ECS (Elastic Container Service) – Here you have a fully managed container orchestration service that makes it easy to deploy, manage and scale containerized applications. It can automatically take care of scaling your application containers as well as the infrastructure underneath.

- EKS (Elastic Kubernetes Service) – This managed Kubernetes service makes it easy for you to run Kubernetes on AWS and on-premises. It also helps you build your Kubernetes cluster.

- S3 (Simple Storage Service) – This highly available, scalable storage service is used to store files and provides a variety of tiers for access frequency and storage reliability. It’s implemented via a pay-as-you-go model, so data storage is never overprovisioned.

- RDS (Relational Database Service) – A managed service that allows you to set up, operate and scale relational databases using popular engines such as MySQL and PostgreSQL. It’s easy to scale up a database instance, and RDS also provides you with the ability to scale allocated storage dynamically as consumed space increases. Additionally, you can scale out read traffic with read replicas.

- CloudWatch – This built-in service for monitoring and for collecting metrics and logs lets you create custom alarms on monitored indicators, which can be used to trigger scaling actions.
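
To show how an Auto Scaling Group can be tied to a predefined CPU metric, here is a hedged sketch using boto3, the AWS SDK for Python. It builds the arguments for the Auto Scaling `put_scaling_policy` call with a target tracking configuration. The group name, policy name, and 50 percent target are assumptions chosen for the example, and `apply_policy` needs boto3 installed plus valid AWS credentials.

```python
from typing import Any, Dict


def target_tracking_policy(group_name: str, target_cpu_percent: float) -> Dict[str, Any]:
    """Build keyword arguments for the Auto Scaling `put_scaling_policy` call."""
    return {
        "AutoScalingGroupName": group_name,
        "PolicyName": f"{group_name}-cpu-target",  # hypothetical naming scheme
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingConfiguration": {
            "PredefinedMetricSpecification": {
                # Average CPU utilization across the group's instances.
                "PredefinedMetricType": "ASGAverageCPUUtilization",
            },
            "TargetValue": target_cpu_percent,
        },
    }


def apply_policy(policy: Dict[str, Any]) -> None:
    """Send the policy to AWS. Requires boto3 and valid credentials."""
    import boto3  # imported lazily so the builder above works without boto3

    boto3.client("autoscaling").put_scaling_policy(**policy)


policy = target_tracking_policy("web-asg", 50.0)  # "web-asg" is a made-up group name
print(policy["PolicyName"])  # web-asg-cpu-target
# apply_policy(policy)  # uncomment to create the policy in your AWS account
```

Keeping the builder separate from the API call makes it easy to review or test the configuration before anything changes in your account.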

Cost Optimization Strategies in Cloud Scalability

One of the key benefits of scalable cloud architecture is its potential for cost efficiency, but leveraging this requires a strategic approach. Businesses can optimize their cloud costs by selecting the right pricing models, such as Reserved Instances and Spot Instances on AWS. Efficiently managing increased storage capacity alongside processing power and networking resources is crucial to accommodate unpredictable loads and achieve performance improvements.

Reserved Instances allow companies to commit to cloud resources for a one- or three-year term at discounted rates, which is ideal for predictable workloads. Spot Instances, on the other hand, offer significant cost savings for flexible, interruption-tolerant workloads, because AWS can reclaim the capacity at short notice. By dynamically adjusting cloud resources in response to traffic and workload changes, companies can minimize unnecessary expenses and avoid overprovisioning.

Additionally, the pay-as-you-go model further enhances cost control by charging only for resources used. However, companies need to carefully balance this with the risk of under-provisioning, which can lead to performance bottlenecks during traffic spikes. Implementing a mix of these strategies ensures that businesses can achieve optimal performance while keeping cloud spending under control.
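
A quick back-of-the-envelope calculation shows why mixing pricing models can pay off. The sketch below compares an all on-demand setup with a mix of reserved capacity for the steady baseline and Spot capacity for bursts. All prices are hypothetical, expressed in cents per instance-hour to keep the arithmetic exact, and real prices vary by instance type and region.

```python
HOURS_PER_MONTH = 730  # common approximation of hours in a month

# Hypothetical prices in cents per instance-hour (not real AWS prices).
ON_DEMAND = 10
RESERVED = 6
SPOT = 3


def monthly_cost_cents(
    baseline_instances: int,
    burst_instance_hours: int,
    baseline_rate: int,
    burst_rate: int,
) -> int:
    """Cost of an always-on baseline plus a number of extra burst instance-hours."""
    baseline = baseline_instances * HOURS_PER_MONTH * baseline_rate
    burst = burst_instance_hours * burst_rate
    return baseline + burst


# Scenario: 4 instances run all month, plus 6 extra instances for 100 hours.
burst_hours = 6 * 100

all_on_demand = monthly_cost_cents(4, burst_hours, ON_DEMAND, ON_DEMAND)
mixed = monthly_cost_cents(4, burst_hours, RESERVED, SPOT)

print(f"All on-demand:   ${all_on_demand / 100:.2f}")  # $352.00
print(f"Reserved + Spot: ${mixed / 100:.2f}")          # $193.20
```

With these made-up numbers the mixed approach costs a little over half as much, but it only works if the burst workload can tolerate Spot interruptions.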

Scale the architecture according to business needs

Scalable cloud computing is all about preparing your infrastructure in the best possible way, right from the outset. By designing and implementing a scalable cloud solution from the start, you're all set for the future in terms of growth (or contraction).

Building scalable cloud architecture doesn't have to be time-consuming or overly expensive, and it comes with many pros including security and monitoring features. Indeed, there are several out-of-the-box AWS services that are worth considering, such as ASG and ECS.

Whichever suits your business needs the best, there's one main advantage across all cloud-native services: the ability to increase or decrease capacity in response to changing loads.