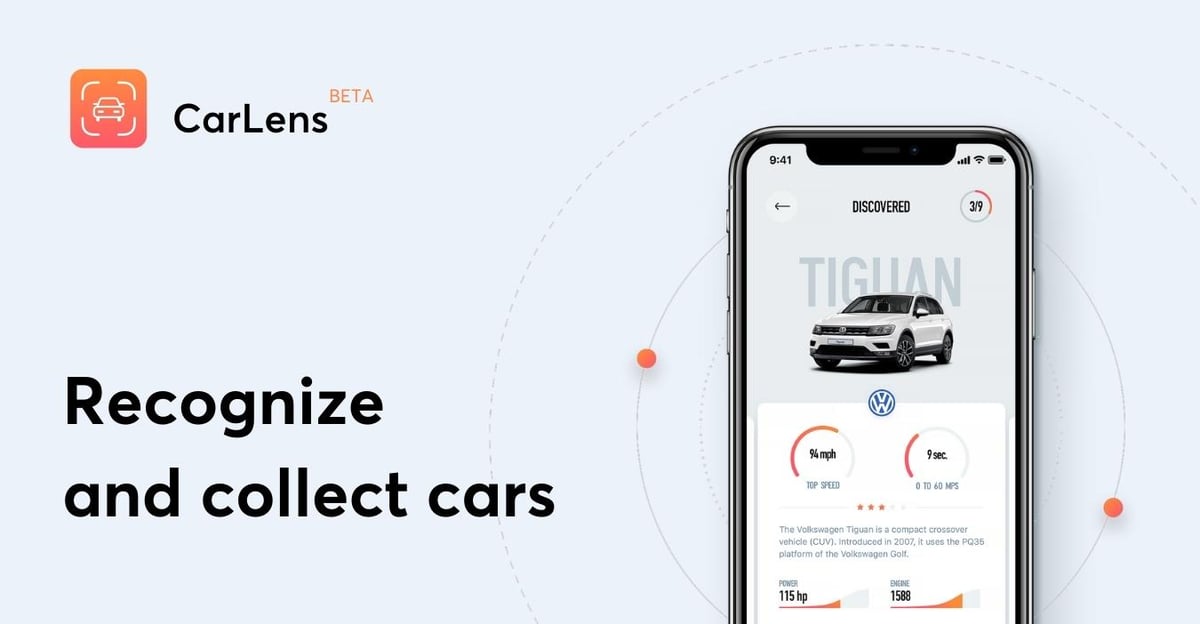

Machine Learning and Augmented Reality Combined in One Sleek Mobile App – How We Built CarLens

Here’s our recipe for building a precise algorithm that detects 5 car models with your smartphone’s camera. Read on to learn about our journey to create a fun tool with cutting-edge solutions.

What’s behind our idea

Do you remember when you were a kid, walking down the street and guessing the brands and models of passing cars? In Netguru’s mobile team we had exactly the same memories, so we decided to give it a try and start an experimental project that would help us identify cars with our smartphones. We believe that learning and researching new technologies works best when you experience them in real-life projects.

That’s why it felt good to try Machine Learning with Tensorflow for image detection, and Augmented Reality engines for photographing cars on the streets. The beginnings were hard (as always), especially when we discovered that 100 images per car brand is not enough to build a working model, but during the last 1.5 months our mobile and product design team did an amazing job. Now we want to share how the process went and what we learned during this really interesting R&D project.

Machine Learning

Machine learning is extremely popular nowadays. It is everywhere, and even if you don’t notice it, you definitely use it in your everyday life: checking an inbox that stays free of spam, asking Siri about today’s weather, or using the dog filter on Instagram (I know that Snapchat was first, but Instagram beat it a long time ago, right? 😜). It all started on desktop computers, but Machine Learning on mobile is a whole new era. We can see it happening, and we couldn’t miss the chance to test it in battle ourselves.

Machine Learning model development - our journey

Let us briefly describe the Machine Learning process. First of all, it is crucial to define the problem. Then you need to prepare all the relevant data and choose the best algorithm for the job. Train your model on that data, then test it on a test dataset that is separate from the training one. Evaluate the model and improve it if needed. That’s it. When we said we were going to describe it briefly, we meant it!
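Keeping the test dataset separate from the training data is the step that is easiest to get wrong. Below is a minimal Python sketch of a stratified train/test split, assuming each sample is an (image path, label) pair; the function name, the 20% test fraction and the seed are illustrative choices, not taken from our project.

```python
import random
from collections import defaultdict
from typing import List, Sequence, Tuple

Sample = Tuple[str, str]  # (image path, car model label)


def stratified_split(samples: Sequence[Sample], test_fraction: float = 0.2,
                     seed: int = 42) -> Tuple[List[Sample], List[Sample]]:
    """Split samples into disjoint train and test lists, class by class.

    Splitting each label separately keeps every car model represented in
    both sets in roughly the same proportion.
    """
    by_label = defaultdict(list)
    for sample in samples:
        by_label[sample[1]].append(sample)

    rng = random.Random(seed)  # fixed seed -> reproducible split
    train: List[Sample] = []
    test: List[Sample] = []
    for label in sorted(by_label):
        items = by_label[label]
        rng.shuffle(items)
        # At least one test photo per class, even for small classes.
        n_test = max(1, round(len(items) * test_fraction))
        test.extend(items[:n_test])
        train.extend(items[n_test:])
    return train, test
```

Splitting per class matters when some car models have far fewer photos than others: a purely random split could leave a rare model with no test images at all.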

Our problem

In our case, the problem was car recognition. We needed to specify exactly which car models we wanted to recognize. Logically, we had to choose the models seen most often on the streets. Assuming that the application would be used mostly in Europe, we chose the 9 most popular models: 3 from Poland, 3 from Germany, and 3 from the UK.

Data Preparation

Data is one of the most important things in the Machine Learning process. It should never be underestimated. Very often it is even more important than the algorithms you choose.

In total, we fed our model 40,000 different photos. To train a neural network you need data, and a lot of it.

The first thing we did was to use the Stanford Cars dataset. It has 16,185 images in 196 classes, which makes roughly 82 photos per model. This was not enough. We then thought we could download data from Google image search. So we opened a browser, searched for the chosen car models, selected the images with a free license, and started downloading. But we would not be programmers if we did it manually. We used Python, and with a little help from a Selenium script [https://github.com/hardikvasa/google-images-download] we quickly had around 500 photos per car model. That was better, but still not enough.

Our next idea was to play a little game called “car spotting”. Geared up with cameras, we went into the city to find the models we needed. We shot several videos, each a couple of minutes long, of every model, and then extracted every 10th frame to get around 2,000 photos per car model in total. The whole data collection process took much more time than expected, so we decided to focus on 5 cars instead of 9.
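Extracting every 10th frame is a simple form of subsampling: neighboring video frames are almost identical, so keeping all of them would mostly add near-duplicates. The sketch below accepts any iterable of frames (in practice they might come from a decoder such as OpenCV’s `cv2.VideoCapture`); the 30 fps frame rate and two-minute clip length in the usage note are assumptions, not measurements from our recordings.

```python
from typing import Iterable, Iterator, TypeVar

T = TypeVar("T")


def every_nth_frame(frames: Iterable[T], n: int = 10) -> Iterator[T]:
    """Yield frames 0, n, 2n, ... from a decoded video stream."""
    if n < 1:
        raise ValueError("n must be >= 1")
    for index, frame in enumerate(frames):
        if index % n == 0:
            yield frame


def frames_kept(duration_s: float, fps: float, n: int = 10) -> int:
    """How many frames every_nth_frame keeps from a clip of this length."""
    total = int(duration_s * fps)
    return (total + n - 1) // n  # ceil(total / n): frame 0 is always kept
```

At an assumed 30 fps, `frames_kept(120, 30)` gives 360 frames for a two-minute clip, so about six such clips per model would add up to the roughly 2,000 photos mentioned above.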

Neural Networks used in our project

We tried several types of networks in the project, including MobileNetV1, MobileNetV2 and InceptionV3. These are ready-made architectures, so we did not have to create them from scratch. We decided to use transfer learning as our training method. This technique saves a lot of work by taking part of a model that has already been trained on a specific task and reusing it in a new model. It significantly shortens training time because it works well with much less data: instead of millions of labeled images and weeks of training on several graphics cards, it requires thousands of images and a few hours on an average ultrabook CPU. Because of the large size of the InceptionV3 model, we decided to focus on MobileNet networks, which are optimized for mobile devices.
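The idea behind transfer learning can be shown without any ML framework. In this pure-Python sketch, `frozen_backbone` and its `FROZEN_WEIGHTS` are hypothetical stand-ins for a pretrained network such as MobileNet with its classification layer removed; only the new softmax layer on top is trained, with plain stochastic gradient descent. It illustrates the technique, not our actual Tensorflow training script.

```python
import math
import random
from typing import List, Sequence

# Hypothetical "pretrained" weights: in real transfer learning these would be
# the layers of a network such as MobileNet, trained beforehand on a large dataset.
FROZEN_WEIGHTS = [[1.0, -1.0], [-1.0, 1.0], [0.5, 0.5]]


def frozen_backbone(image: Sequence[float]) -> List[float]:
    """Feature extractor whose weights are never updated: ReLU(W x)."""
    return [max(0.0, sum(w * x for w, x in zip(row, image))) for row in FROZEN_WEIGHTS]


def softmax(scores: Sequence[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in scores]
    total = sum(exps)
    return [e / total for e in exps]


def logits(W, b, features) -> List[float]:
    return [sum(w * f for w, f in zip(W[c], features)) + b[c] for c in range(len(W))]


def train_head(images, labels, num_classes, epochs=200, lr=0.5, seed=0):
    """Train only a new softmax layer on top of the frozen features (SGD)."""
    feats = [frozen_backbone(img) for img in images]  # computed once, reused every epoch
    dim = len(feats[0])
    rng = random.Random(seed)
    W = [[rng.uniform(-0.01, 0.01) for _ in range(dim)] for _ in range(num_classes)]
    b = [0.0] * num_classes
    for _ in range(epochs):
        for x, y in zip(feats, labels):
            probs = softmax(logits(W, b, x))
            for c in range(num_classes):
                # Gradient of cross-entropy loss with respect to logit c.
                grad = probs[c] - (1.0 if c == y else 0.0)
                for i in range(dim):
                    W[c][i] -= lr * grad * x[i]
                b[c] -= lr * grad
    return W, b


def predict(W, b, image) -> int:
    scores = logits(W, b, frozen_backbone(image))
    return max(range(len(scores)), key=scores.__getitem__)
```

Because the backbone never changes, its features can be computed once per image, which is one reason retraining can take hours on a CPU rather than weeks on GPUs.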

Mobile development with Machine Learning

At first, Tensorflow seems very difficult and extensive. And it is, but it also provides a lot of tools and interfaces that do much of the work for you. The TF team and the community also publish lots of useful articles, so even a beginner who only understands the basics of neural networks can train a model for a specific task.

Tensorflow is awesome! Its biggest advantage is easy integration with mobile applications on both Android and iOS. The small size of the model and library also lets you run predictions locally on the smartphone, fully independent of the internet, which means you can, for example, enjoy the full functionality of the application even on vacation abroad.

Testing performance of the model

Let’s talk about speed and performance. We compared two versions of Tensorflow: the normal one and Tensorflow Lite. Classifying one frame (224×224 px) takes around 150 ms with Tensorflow Lite and around 200 ms with normal Tensorflow. On the other hand, normal Tensorflow works on non-quantized data, so its results should be slightly more accurate. All tests were performed on a Samsung Galaxy S9.
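Per-frame timings like these are easy to distort: the first inferences are usually slower while the interpreter allocates buffers, and individual runs vary. Here is a minimal Python sketch of a fairer measurement, not necessarily how our numbers were collected; `classify` is a placeholder for whatever runs the model on one frame. It discards a few warm-up calls, then reports the median rather than the mean so occasional outliers do not dominate.

```python
import statistics
import time
from typing import Callable, Sequence


def measure_latency_ms(classify: Callable[[object], object],
                       frames: Sequence[object],
                       warmup: int = 5) -> float:
    """Return the median wall-clock time (ms) needed to classify one frame."""
    # Warm-up calls are timed by nobody: they absorb one-off setup costs.
    for frame in frames[:warmup]:
        classify(frame)
    timings = []
    for frame in frames:
        start = time.perf_counter()
        classify(frame)
        timings.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(timings)
```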

How do you check whether the model is good enough? The first thing we did was field testing. We simply went to our office parking lot and tested the application on the cars parked there. Based on that, we improved our model by adding two classes: “not a car”, which indicates that there is no car in view, and “other car”, which is the default class for car models outside our scope.
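With the two extra classes, the app has to decide what to show for each prediction. The sketch below is one possible mapping; the label names and the 0.6 confidence threshold are hypothetical, and the threshold is an extra safeguard assumed here rather than something described above.

```python
from typing import Optional, Sequence

NOT_A_CAR = "not_a_car"  # hypothetical label names
OTHER_CAR = "other_car"


def label_to_display(probabilities: Sequence[float], labels: Sequence[str],
                     min_confidence: float = 0.6) -> Optional[str]:
    """Map classifier output to the text shown over the camera view.

    Returns None when nothing should be shown.
    """
    best = max(range(len(probabilities)), key=probabilities.__getitem__)
    label, confidence = labels[best], probabilities[best]
    if label == NOT_A_CAR or confidence < min_confidence:
        return None  # no car in view, or the model is unsure
    if label == OTHER_CAR:
        return "Unknown model"  # a car, but not one of the supported models
    return label
```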

We also used an evaluation technique called a “confusion matrix”. It sounds scary, but it is very simple. First, we prepared test data containing car photos grouped by class. Then we ran our model on all the photos and saved the classification results. From those results we built a matrix that showed which class performed the worst. We could then collect more data for that class and retrain the model. Rinse and repeat.
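A confusion matrix stores, for every true class, how many test photos were assigned to each predicted class. In this sketch, “performs the worst” is taken to mean the lowest per-class recall (correct predictions divided by all photos of that class), which is one reasonable choice among several; the class names in the tests are placeholders.

```python
from typing import List, Sequence, Tuple


def confusion_matrix(true_labels: Sequence[str], predicted: Sequence[str],
                     classes: Sequence[str]) -> List[List[int]]:
    """matrix[i][j] = number of photos of class i classified as class j."""
    index = {c: k for k, c in enumerate(classes)}
    matrix = [[0] * len(classes) for _ in classes]
    for t, p in zip(true_labels, predicted):
        matrix[index[t]][index[p]] += 1
    return matrix


def worst_class(matrix: List[List[int]], classes: Sequence[str]) -> Tuple[str, float]:
    """Return the class with the lowest recall and that recall."""
    recalls = {}
    for i, c in enumerate(classes):
        total = sum(matrix[i])  # all test photos of class c
        recalls[c] = matrix[i][i] / total if total else 0.0
    name = min(recalls, key=recalls.get)
    return name, recalls[name]
```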

Improving the model

After the testing phase, we realized that we needed to define the problem more precisely. Which production years were we going to recognise? The same car model from 2008 and 2018 can look extremely different! From which sides of the car should recognition be possible? We had a lot of data for the fronts of cars, and very little for other perspectives.

So we narrowed the training data, not in terms of quantity but in terms of quality. We decided to:

- Use the data for the newest models of cars, with a 2017 or 2018 year of production
- Cut off models which are extremely similar to each other and are hard for a model to differentiate
- Recognise only the front & sides of the car
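These rules translate directly into a filter over the dataset, provided each photo carries the necessary metadata. The `Photo` record below, with its `year` and `view` fields, is a hypothetical annotation format used for illustration; the look-alike models are passed in as a parameter because their names are not given here.

```python
from dataclasses import dataclass
from typing import AbstractSet, Iterable, List


@dataclass(frozen=True)
class Photo:
    path: str
    model: str
    year: int   # production year of the photographed car
    view: str   # "front", "side", "rear", ...


MIN_YEAR = 2017
ALLOWED_VIEWS = frozenset({"front", "side"})


def narrow_dataset(photos: Iterable[Photo],
                   excluded_models: AbstractSet[str] = frozenset()) -> List[Photo]:
    """Keep recent cars seen from the front or side, minus look-alike models."""
    return [p for p in photos
            if p.year >= MIN_YEAR
            and p.view in ALLOWED_VIEWS
            and p.model not in excluded_models]
```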

Augmented Reality

Since we did not have any restrictions with regard to technology, we decided to choose the latest and greatest AR solution available. We went with ARCore coupled with the Sceneform SDK from Google. Sceneform can draw just about any Android view in 3D and AR. This includes Lottie animations!

Usually, when using an AR framework, you first need to find a flat plane to put objects on. In our use case that was a blocker, because a car is not exactly flat. Fortunately, ARCore supports hit tests. A hit test detects 3D points based on the mapped environment and the camera’s position. In other words, ARCore casts a ray forward from the camera and returns the closest point where the ray hits the mapped environment. That way we could determine where the car is located. Since we didn’t want to put the label directly onto that point on the car’s surface, we estimated the average car size and placed our label in the middle of this estimated volume, 1 meter above the ground.
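The label placement can be sketched with a little vector math. Assuming a y-up world coordinate system in meters (the convention ARCore uses), a known ground height, and the camera and hit-test positions as inputs, the function below pushes the hit point half an estimated car depth further along the horizontal viewing direction and sets the label 1 meter above the ground. The function name and the 2-meter default depth are illustrative assumptions, not our measured average car size.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]  # (x, y, z) in meters, y pointing up


def label_anchor(camera: Vec3, hit: Vec3, ground_y: float,
                 estimated_depth_m: float = 2.0,
                 label_height_m: float = 1.0) -> Vec3:
    """Estimate where to place the label for a car hit by the camera ray."""
    # Horizontal direction from the camera to the hit point (ignore height).
    dx, dz = hit[0] - camera[0], hit[2] - camera[2]
    horizontal = math.hypot(dx, dz)
    if horizontal == 0:
        raise ValueError("camera is directly above or below the hit point")
    # The hit point lies on the car's near surface: move half the estimated
    # depth further away so the label sits over the middle of the car.
    shift = (estimated_depth_m / 2) / horizontal
    return (hit[0] + dx * shift, ground_y + label_height_m, hit[2] + dz * shift)
```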

Using ARCore has two downsides. First, only a handful of devices support it; second, it makes your phone super hot. But if that is not a problem for you, we definitely recommend it!

Mobile Technologies

iOS

We couldn’t have been more excited to develop the iOS CarLens app, as we could get our hands on the brand new frameworks introduced by Apple in 2017: Core ML and ARKit.

Core ML

Core ML is a framework that allows you to integrate Machine Learning models into iOS apps. However, the model produced by Tensorflow first has to be converted to the .mlmodel format. We used the tfcoreml tool to do so. Then, with the help of the amazing Core ML framework, we could easily predict car models from the data received in the iOS app.
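One detail worth checking during conversion is input preprocessing. Standard MobileNet checkpoints expect pixel values scaled from [0, 255] to [-1, 1], while a Core ML image input applies `y = scale * x + bias` to each channel. tfcoreml exposes this through its `image_scale` and `red_bias`/`green_bias`/`blue_bias` options; the sketch below only checks the arithmetic, assuming the model was trained with the standard MobileNet preprocessing.

```python
def mobilenet_preprocess(pixel: float) -> float:
    """Standard MobileNet input scaling: [0, 255] -> [-1, 1]."""
    return pixel / 127.5 - 1.0


# Core ML image inputs compute y = scale * x + bias per channel, so the same
# normalization is expressed with these values (assumed, matching the above).
IMAGE_SCALE = 2.0 / 255.0
CHANNEL_BIAS = -1.0  # used for the red, green and blue channels alike


def coreml_preprocess(pixel: float) -> float:
    return IMAGE_SCALE * pixel + CHANNEL_BIAS
```

If the two platforms normalize pixels differently, the same image can produce different predictions on iOS and Android, so this is a good first place to look when results diverge.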

ARKit

ARKit is a framework that enables Augmented Reality experiences in applications. In our case, each recognized car gets a label above it indicating its model. Configuring the ARSession was pretty straightforward: it came down to implementing delegate methods, where we checked whether the label could be presented above the car, and setting up an ARWorldTrackingConfiguration with proper plane detection. Interestingly, the Augmented Reality part was easier to implement on iOS than on Android, and our iOS team managed to build it much faster.

App architecture

Model-View-Controller (MVC) was chosen as the architectural pattern because the application was fairly small. At the same time, the app uses some nested view controllers, especially on the main recognition screen. No internet connection is needed, and the cars’ parameters are stored in a local JSON file, which is the same on both platforms: iOS and Android.

CarLens has a beautiful UI (thanks to our designers!) and animations. To achieve that kind of interaction, we used Lottie, a framework created by Airbnb, along with custom animations. The animated car list screens were implemented with the help of a custom CollectionViewFlowLayout. Thanks to this approach, we didn’t clutter the View Controller with a bunch of delegate methods responsible for the layout. We think our pragmatic approach worked very well in this situation. We didn’t run into problems with the size of View Controllers, which is the main weakness of MVC on iOS. When a View Controller started to grow, we just added another nested one or moved some of the logic to separate classes.
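A shared JSON file keeps the car data identical on both platforms. Here is a minimal Python sketch of loading such a file and indexing it by the classifier’s label; the schema and every field name below are hypothetical, since the real file is not shown here.

```python
import json
from dataclasses import dataclass
from typing import Dict

# Hypothetical schema: the real CarLens file's fields are not shown in this article.
CARS_JSON = """
[
  {"id": "car_a", "brand": "Brand A", "model": "Model 1", "speed_kmh": 200, "power_hp": 150},
  {"id": "car_b", "brand": "Brand B", "model": "Model 2", "speed_kmh": 230, "power_hp": 190}
]
"""


@dataclass(frozen=True)
class Car:
    id: str
    brand: str
    model: str
    speed_kmh: int
    power_hp: int


def load_cars(raw: str) -> Dict[str, Car]:
    """Parse the bundled JSON once and index cars by the classifier's label id."""
    return {entry["id"]: Car(**entry) for entry in json.loads(raw)}
```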

We also added unit and UI tests to the application using the XCTest framework, which also helped us see where our code could be improved. The iOS team’s lineup changed over time, and new people joined the project, yet onboarding was very easy for them. There was rarely a need to contact someone who had implemented something but was no longer working on the project. We believe our well-documented code made this possible.

Android

AR

ARCore takes care of all the math developers normally don’t want to deal with, such as plane recognition and tracking the surrounding environment and camera position, and exposes a nice API to work with. To make it even easier, Google created the Sceneform SDK. With it you can easily import 3D objects and place them in AR space, as well as render normal Android views in it. It even supports rendering Lottie animations! This let us build our car sticker as a normal Android view and place it above the car. That makes working with designers very easy, since we use the same tools as for regular application views.

Tensorflow and TensorFlow Lite

In the first versions of the app we used Tensorflow Lite as the Machine Learning engine. However, we had some problems converting the normal Tensorflow model to it, and its results differed slightly from those of the iOS version. So we decided to integrate full-blown Tensorflow. Since the model is not quantized there, the results became mostly the same as in the iOS app. The model is also not much bigger, and we could skip the model conversion step, which sped up our deployment.
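Quantization explains the small accuracy gap. Tensorflow Lite’s 8-bit scheme stores a real value r as an integer q with `r = scale * (q - zero_point)`. The pure-Python sketch below picks the scale and zero point for a list of weights and shows the round-trip error; it illustrates the affine scheme, not the actual Tensorflow Lite converter.

```python
from typing import List, Tuple


def quantize_params(values: List[float]) -> Tuple[float, int]:
    """Choose scale and zero point so [min, max] maps onto uint8 [0, 255]."""
    lo = min(min(values), 0.0)  # the range must include 0 so it is exact
    hi = max(max(values), 0.0)
    scale = (hi - lo) / 255.0 or 1.0  # avoid a zero scale for all-zero input
    zero_point = round(-lo / scale)
    return scale, zero_point


def quantize(v: float, scale: float, zero_point: int) -> int:
    return max(0, min(255, round(v / scale) + zero_point))


def dequantize(q: int, scale: float, zero_point: int) -> float:
    return scale * (q - zero_point)
```

Every weight can move by up to half a quantization step, which is why a quantized model can give slightly different results from the float model it came from.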

App architecture

To make it work, we used the battle-tested MVP architecture with a little help from the Mosby library [https://github.com/sockeqwe/mosby]. Most of the work happens in the main activity, so we split some logic into custom views. Background processing (transforming bitmaps, Tensorflow classification) was done with RxJava. With that approach we could easily unit test most of the logic.

We used RenderScript to convert Camera2 images (YUV_420_888) to bitmaps. That way our bitmap processing was blazing fast.
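In YUV 4:2:0 images, the luma (Y) plane has full resolution, while each chroma (U, V) sample is shared by a 2×2 block of pixels. The sketch below spells out the kind of per-pixel conversion that RenderScript’s `ScriptIntrinsicYuvToRGB` performs, assuming tightly packed planes and full-range BT.601 coefficients. Real `YUV_420_888` frames from Camera2 come with row and pixel strides (the U and V planes are often interleaved), which this sketch ignores.

```python
from typing import List, Sequence, Tuple


def clamp(v: float) -> int:
    return max(0, min(255, int(round(v))))


def yuv420_to_rgb(y: Sequence[int], u: Sequence[int], v: Sequence[int],
                  width: int, height: int) -> List[Tuple[int, int, int]]:
    """Convert tightly packed planar YUV 4:2:0 to row-major RGB pixels."""
    chroma_w = (width + 1) // 2  # chroma planes have half the width (rounded up)
    out = []
    for row in range(height):
        for col in range(width):
            luma = y[row * width + col]
            c = (row // 2) * chroma_w + col // 2  # one U/V sample per 2x2 block
            cb, cr = u[c] - 128, v[c] - 128
            out.append((clamp(luma + 1.402 * cr),
                        clamp(luma - 0.344136 * cb - 0.714136 * cr),
                        clamp(luma + 1.772 * cb)))
    return out
```

Doing this in an interpreted per-pixel loop is far too slow for live camera frames, which is exactly why a native, parallel path such as RenderScript pays off.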

Lessons Learned

Looking back on those 2 months, we are happy with the work we’ve done. We faced new challenges and succeeded! Of course, not everything went smoothly, just like in any other project. Working on both platforms, iOS and Android, let us see the differences between them. Some things were much easier to implement on one platform than on the other, and vice versa. For example, only one iOS developer worked on the AR functionality, while two senior developers worked on it on Android, and both teams delivered it at the same time.

However, if you look at the Lottie animation implementation, you might be surprised. You’ve probably guessed what we’re going to say: it was implemented faster on Android! The reason is simple: it turned out that you can’t add Lottie animations to ARKit views, so we couldn’t animate the pins over the car on iOS, and the team had to build them with Apple’s native framework instead. Overall, though, it was much easier to implement the Augmented Reality functionality and Machine Learning logic on iOS than on Android. We can truly call the ARKit and Core ML frameworks amazing!

On the other hand, if you ask us what the main lesson about Machine Learning was, the answer is easy: the quality of training and testing data is crucial. Both the quality and the quantity. We still haven’t managed to create an ideal model that we’re 100% satisfied with. So building a Machine Learning model as non-experts in ML is possible; however, there’s no guarantee you’ll get a result you’re happy with.

The article was written by: Krzysztof Jackowski, Michał Kwiecień, Anna-Maria Shkarlinska, Marcin Stramowski, Paweł Bocheński, Michał Warchał, Bartosz Kucharski, Szymon Nitecki.
