Create complex and innovative applications with Python development services

Leverage the power of one of the fastest-growing technologies and build cutting-edge apps fast

Grow your business with a leading Python development company

Developing a successful digital product is a complex process that involves addressing the needs of your business and users, applying the right tech solutions, and creating a secure and stable app that provides exceptional user experience.

It requires choosing the right development company, using innovative solutions, and following a proven development process.

Achieve your goals by applying the right solutions

- Transform your idea into a product. Let the right technology solutions help you launch your web or mobile app successfully.

- Innovate and stand out in a digital world. Respond to rapidly changing market needs and stay two steps ahead of your competitors.

- Turn interest into engagement and loyalty. Develop an innovative, functional, fast, and reliable web or mobile app that builds loyalty and trust with your users.

- Create a secure and stable product. Make sure you develop your app in line with the latest security standards. Avoid data leaks and reputational risks.

Fortuna.ai leveraged the power of Python to introduce innovation to the work of sales representatives

The tool helps companies find their ideal clients and automate the top half of the sales funnel: finding prospective clients and then determining whether they are qualified for the business. Choosing the right technology solutions enabled Fortuna.ai to accelerate their sales cycles significantly.

-

Salespeople are spending 80 percent of their time doing low-value activities. We automate the entire process so they can spend more time being account managers and concentrate on sales rather than the mundane churn.

Asad Naeem

Co-founder and President at Fortuna.ai

Why does Python stand out in the digital world?

- Chosen by startups and multi-billion-dollar corporations. Python suits both companies that work under time pressure and corporations that build very complex apps that have to meet the highest security standards.

- A powerful tool for data science. Python is widely used in data science, e.g., for artificial intelligence and machine learning solutions, and it is well suited to data manipulation and automating repetitive tasks.

- Exceptionally productive. Python is easy to write, read, and learn. That makes it one of the most loved technologies among developers and helps them build high-quality products efficiently.

- Created and used by a vibrant community. Python is one of the most widespread coding languages today. It is supported by a big community of developers who create tools and share their knowledge.

- Versatile and efficient. Python is renowned as one of the most versatile software development technologies. If you have a product with a set of functionalities in mind, you can build them fast.

- Rich in ready-to-use solutions. The Python community has created thousands of open source libraries. If you want to build something with Python, you will likely be able to assemble much of it from ready-to-use components.

- Easy to integrate. Python is often called a "glue language" because it is easy to integrate with other components such as other languages, frameworks, external services, and existing infrastructure elements.

- Battle-tested, stable, and secure. Python is a mature, stable, and trusted technology that is well established in the market, which makes it a sound choice for financial applications dealing with sensitive data.

Take advantage of proven processes and make your project a success

A good Python development company will be able to take care of even the most complex of processes and will guide you smoothly towards your goal.

At Netguru, our software development processes are based on industry best practices and years of experience, so you can be sure that your project is in good hands.

- Find a partner. Select the right Python development company for your project.

- Develop your idea. Brainstorm and analyze together with your web development consultants.

- Choose a technology. Make a decision about the tech stack and overall approach with your team.

- Design your solution. Create a beautiful, user-friendly web or mobile app or design a big data solution.

- Begin development. Watch as your expert Python development team brings your app to life.

- Go to market. Get your custom solution into the hands of your customers and grow your business.

Why should you build a product in Python? A short guide to using Python for web development


Python is a free, open source programming language that has been used in development services for nearly 30 years, and it is still considered one of the top programming languages – particularly for web development and data science projects.

Why is it so popular? Well, in short, because Python is a high-level, dynamic programming language that focuses on rapid and robust development and can be used for projects of practically any size.

Which companies use Python?

It’s a great choice for startups that are working under time pressure but still want to deliver a high-quality solution to the market on time.

It’s also a powerful tool used by multi-billion-dollar corporations and extremely talented developers around the world. Companies like Google, Facebook, and Microsoft use Python for purposes such as web applications, data science, AI, machine learning, deep learning, and task automation.

Python has been used to build some of the world’s biggest online services: social media giant Instagram, digital music platform Spotify, and ridesharing company Uber all rely on Python in their technology stacks.

Quick solutions for programming challenges

Python remains one of the fastest-growing languages. It’s a first-choice language for many students and experienced programmers, particularly for custom web development and app development.

Developers and data scientists choose Python because it’s versatile, easy to read and understand, and allows for rapid development. Python provides developer-friendly solutions for common programming problems and is compatible with many major platforms and systems.

Moreover, Python is open source. Because of its simplicity, openness, and rich history, Python is extremely popular among developers, and a huge ecosystem has been created around it.

Python has a rich portfolio of frameworks (such as Flask and Django) and libraries (like NumPy and pandas) that help speed up development. If there’s a problem, a solution for it probably already exists in the Python ecosystem.

Most of the time, if you need to quickly test your idea, you don’t need to waste too much time on development – just pick up some publicly available modules and build your software like LEGO blocks. It’s all thanks to a large, vibrant Python community that contributes to the language’s growth.
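To make the building-block idea concrete, here is a minimal sketch that assumes a made-up task: finding the most frequent words in customer feedback. The function name `top_words` and the sample text are illustrative only. It combines two standard library modules, `re` and `collections`, so it runs without installing anything.

```python
import re
from collections import Counter


def top_words(text: str, n: int = 3) -> list[tuple[str, int]]:
    """Return the n most frequent words in text, ignoring case."""
    # Split the lowercased text into runs of letters and apostrophes.
    words = re.findall(r"[a-z']+", text.lower())
    # Counter does the counting and the ranking for us.
    return Counter(words).most_common(n)


# Made-up sample input for illustration.
feedback = "Fast app. Fast support. Reliable app, reliable team, fast delivery."
print(top_words(feedback))
```

A real prototype would likely swap in third-party packages for the heavier parts, but the pattern of wiring existing modules together stays the same.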

Python has an established position in web development. Frameworks like Django and Flask help developers quickly create secure, fast web applications, including the back ends that serve desktop and mobile apps.
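As a rough illustration of what a Python web application looks like at its core, the sketch below uses only the standard library's WSGI support rather than Django or Flask. Frameworks add routing, templating, and security features on top of this kind of interface. The names `app` and `call_app` and the `/health` path are assumptions made for the example.

```python
from wsgiref.util import setup_testing_defaults


def app(environ, start_response):
    """Minimal WSGI application: answer every request with plain text."""
    body = f"Hello from {environ['PATH_INFO']}".encode("utf-8")
    start_response("200 OK", [
        ("Content-Type", "text/plain; charset=utf-8"),
        ("Content-Length", str(len(body))),
    ])
    return [body]


def call_app(path: str) -> tuple[str, bytes]:
    """Invoke the app in-process with a fake request (hypothetical helper)."""
    environ = {"PATH_INFO": path}
    setup_testing_defaults(environ)  # fills in the other required WSGI keys
    captured = {}

    def start_response(status, headers, exc_info=None):
        captured["status"] = status

    body = b"".join(app(environ, start_response))
    return captured["status"], body


print(call_app("/health"))
```

To serve the same `app` over HTTP during development, it can be passed to `wsgiref.simple_server.make_server("", 8000, app).serve_forever()`. A production deployment would normally use a framework and a dedicated server instead.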

Python is on the rise

More recently, Python has gained even more popularity thanks to the growth of data science, e.g., in the areas of artificial intelligence and machine learning. Python is a first choice for many AI-first companies. It is often preferred over alternatives such as R for general data manipulation and for automating repetitive tasks, and it's a strong choice if you're planning to build a digital product based on machine learning.
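The kind of data manipulation mentioned above can be sketched in a few lines. This example assumes a made-up CSV export of deals per region and computes the average deal size for each one. The column names, the sample values, and the helper `average_by` are all hypothetical. It uses only the standard library so that it runs as-is. In practice, a library such as pandas is the usual tool for this job.

```python
import csv
import io
from collections import defaultdict
from statistics import mean

# Made-up sample data standing in for a real export file.
RAW = """region,deal_size
north,1200
south,800
north,1800
south,1000
"""


def average_by(rows, key, value):
    """Group rows by the `key` column and average the `value` column."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[key]].append(float(row[value]))
    return {name: mean(values) for name, values in groups.items()}


rows = csv.DictReader(io.StringIO(RAW))
print(average_by(rows, "region", "deal_size"))
```

Pointing `csv.DictReader` at an opened file instead of the in-memory string turns this into a small script that can be rerun whenever a new export arrives.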

Wrapping up: In which areas is Python development the perfect choice?

Python development is a popular choice for creating custom web, desktop, and mobile apps and is considered a great choice in the areas of:

- Finance and FinTech

- Data Science

- Big Data

- Artificial Intelligence

- Machine Learning and Deep Learning

- Research

- Blockchain

- Modern Software Development

Build your competitive advantage with Netguru's Python-based and other web development services

Choosing Netguru Python development services will enable you to make full use of the knowledge and experience of a number of experts to make your project a success. We are a leading Python development company and have the experience and expertise to build your product from scratch or improve an existing product.

Our team of 650+ specialists (UI/UX designers, developers, illustrators, quality assurance experts, and business analysts) creates custom software solutions for companies of all sizes – from startups to enterprises.

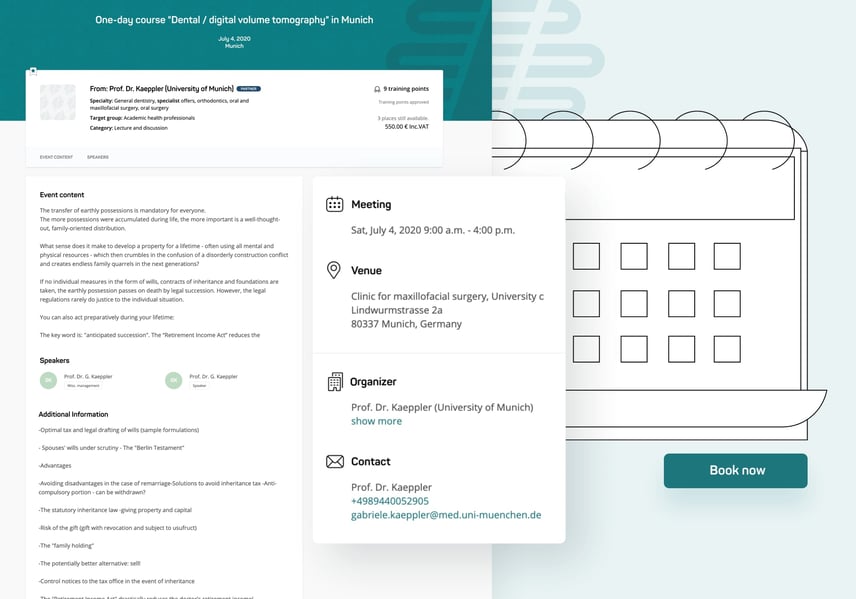

German Healthcare Revived with Digitization

Convincing doctors and other medical personnel to acknowledge the advantage of digital data over paper files might be a tricky mission.

We’ve had the pleasure of collaborating with a corporate startup that is on its way to achieving the goal of digitizing the way doctors expand their knowledge and gain new, critical skills.

The long-term vision for Naontek is to create a digital point of contact for the whole healthcare community in the country. They intend to fill the technological gap in the industry by introducing easily accessible digital products and services.

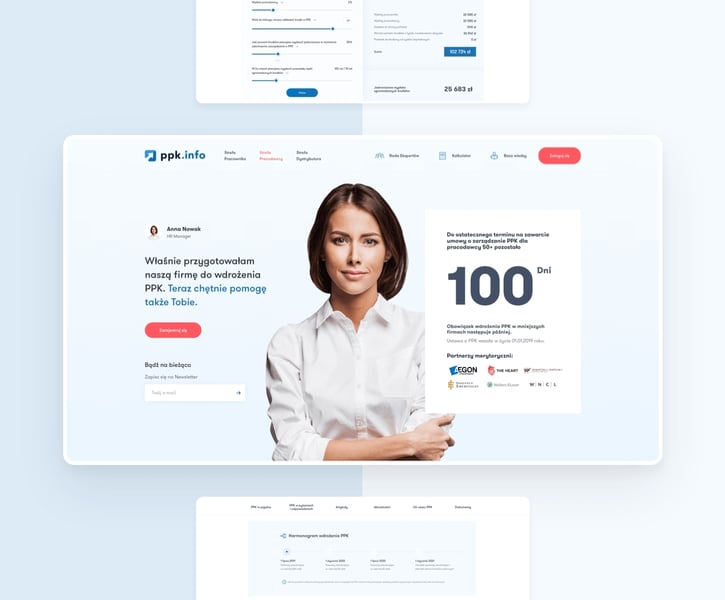

Supporting sales of pension plans with a web platform

PPK is a long-term savings program implemented by the Polish government. One of the Polish financial institutions saw this as an opportunity to build an information service that explains the program in an easy way. This is how the cooperation between Aegon, The Heart, and Netguru started.

The Netguru team started building the platform from scratch. We took the project through all the stages: a Product Design Sprint workshop, design, implementation, and development.

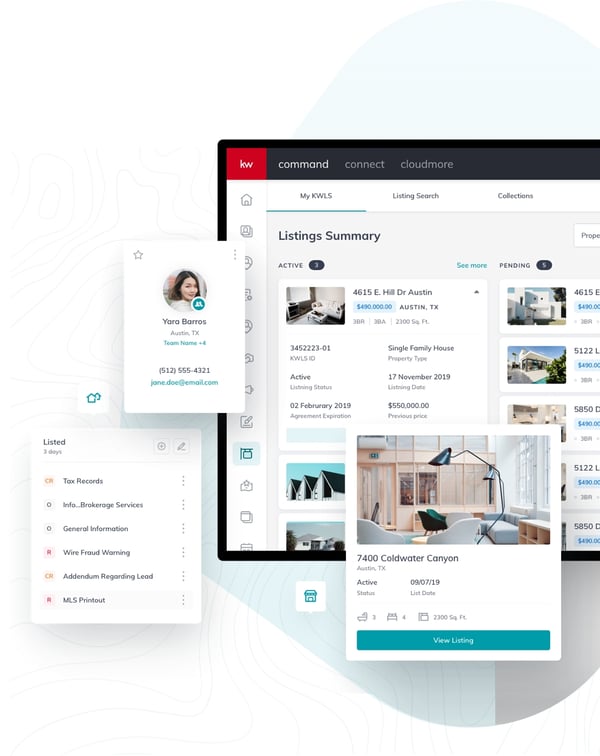

How Keller Williams gained 85,000 active users with an enterprise mobile app

Keller Williams is the world’s largest real estate franchise ranked by agent count (employing more than 170,000 agents). KW decided to reposition itself as a tech company and invested heavily in its own software, the cloud, and AI.

To stay ahead of competitors, Keller Williams has undertaken one of the most ambitious projects in the industry: leveraging its data to boost artificial intelligence-powered technology.

Our partners on working with Netguru

-

Our cooperation with Netguru is a true partnership. Whenever we faced challenges this year, we could rely on Netguru for our urgent staffing needs and time-critical deliverables. The Netguru team has gone above and beyond any expectations of what a strong and reliable partner can be.

Hima Mandali

CTO at Solarisbank

-

My experience of working with Netguru was absolutely excellent. Different software teams go through ups and downs, and good software teams are resilient. What makes the Netguru team succeed is being able to ride ups and downs as a team.

Gerardo Bonilla

Product Manager of Moonfare

-

What impressed us most was how quickly the Netguru team grasped what it was we wanted to do and were able to make valuable suggestions. The result of the workshop was that we came away with a shared image of what was to be built rather than a large volume of detailed specifications.

David Nurser

Helpr Co-founder

-

The team was absolutely wonderful to deal with. Their professionalism and dedication have made them our go-to team for any development work.

Asad Naeem

Co-founder & President, Fortuna.ai

Delivered by Netguru

We are actively boosting our international footprint across various industries such as banking, healthcare, real estate, e-commerce, travel, and more. We deliver products to such brands as Solarisbank, IKEA, PAYBACK, DAMAC, Volkswagen, Babbel, Santander, Keller Williams, and Hive.

- $5M granted in funding for Shine, a self-care mobile app that lets users practice gratitude

- $28M granted in funding for Moonfare, an investment platform that enables investment in private equity funds

- $20M granted in funding for Finiata, a data-driven SME lending platform provider

- $47M granted in funding for Tourlane, a lead generation tool that helps travelers to make bookings

- 15+ years on the market

- 400+ people on board

- 2500+ projects delivered

- 73: our current NPS score


Read more on our blog

Check out our knowledge base collected and distilled by experienced professionals, and find answers to some of the most important questions on Python development.


Curious whether Netguru is the right fit for your project?

Looking for Python development services?

Before we start, we would like to better understand your needs. We'll review your application and schedule a free estimation call.
