Speech-to-Text with GPT Sentiment Analysis Use Cases to Upgrade Helpdesk

Yet, despite all this effort, mistakes still happen, and opportunities to enhance service often slip by. Without real-time feedback, making meaningful, on-the-spot changes becomes a challenge—leaving both agents and customers frustrated.

This is where AI steps in. Tools like speech-to-text technology and GPT-powered sentiment analysis eliminate much of the guesswork. These solutions automatically review calls, flag issues, and offer actionable insights, allowing teams to focus on what truly matters: helping customers. Instead of sifting through hours of recordings, supervisors gain access to accurate, real-time evaluations and practical suggestions for improvement. The result? Faster responses, more consistent service, and happier customers.

According to Gartner, by 2025, 80% of customer service organizations will leverage generative AI technology to boost agent productivity and enhance the customer experience (CX). This growing reliance on AI reflects its potential to transform customer support operations.

In this article, we’ll dive into how these AI-powered tools work and how businesses are already using them to transform customer support.

What Are Speech-to-Text and GPT Sentiment Analysis?

At its core, speech-to-text technology does exactly what the name suggests: it converts spoken language into written text. Whether it’s a live conversation or a recorded call, the technology listens, transcribes, and organizes what was said in a format that’s easy to analyze. This automation reduces the need for manual note-taking or transcription, saving time and making records more consistent. Many speech-to-text systems now use LLMs to better handle different accents, speech patterns, and industry-specific terminology.

GPT-powered sentiment analysis goes a step further by interpreting the emotional tone and context of those conversations. It analyzes words, phrases, and even subtle cues to understand whether a customer is satisfied, frustrated, or confused. This real-time emotional insight helps businesses respond more effectively, whether it’s resolving an issue or identifying areas for improvement.

Generative AI tools, like those behind GPT analysis, deliver measurable improvements. For example, 57% of companies using these technologies report better customer effort scores, while 56% see higher agent productivity.

Together, these technologies are a powerhouse for improving customer support. Speech-to-text captures the “what” of a conversation, while sentiment analysis interprets the “how.” This combination provides a deeper understanding of customer interactions, enabling businesses to spot trends, refine processes, and create experiences that keep customers coming back.

Real-World Use Cases Transforming Helpdesk Operations

Generative AI is increasingly becoming a cornerstone of modern customer support, helping teams streamline workflows and deliver faster responses. In fact, according to HubSpot’s State of AI report, service professionals save more than two hours a day by using generative AI to respond to customers quickly.

Use Case 1: Bluedot Enhancing Netguru Sales Performance

Sales teams often struggle to pinpoint what makes their best calls successful. Manual reviews are time-consuming, and key insights can easily be overlooked. To tackle this, Netguru leverages Bluedot, an AI-powered tool that combines speech-to-text and sentiment analysis to enhance call evaluations and improve sales outcomes. This significantly reduces meeting documentation time—from 1–2 days to just 5–10 minutes—while providing instant access to call summaries and action items.

Here’s how it works: Bluedot records calls directly from platforms like Google Meet or Zoom. These calls are automatically transcribed and synced with HubSpot, eliminating the need for manual note-taking. Beyond transcription, Bluedot analyzes the tone and content of each call, providing summaries that highlight critical moments, such as how objections were handled or how product benefits were communicated.

The results? Sales teams gain instant insights into what works. For example, Bluedot might identify that successful calls emphasize a specific feature or use certain phrasing that resonates with clients. These insights help refine sales scripts and strategies, leading to improved performance across the board.

Additionally, LLMs add another layer by offering feedback on communication, highlighting clarity, tone, and key areas for improvement such as phrasing or objection handling.

Use Case 2: Insurance Helpdesk – PoC by Netguru R&D

Netguru’s R&D team developed a proof-of-concept (PoC) system that leverages speech-to-text technology and GPT analysis to optimize insurance help desk operations.

Here’s how it works: The system transcribes call audio into text, handling even lengthy conversations exceeding 20 minutes. Once transcribed, GPT analyzes the content to assess whether the agent followed company best practices, effectively addressed customer needs, and complied with regulatory guidelines.

The system also generates concise summaries, highlighting key moments and identifying any deviations from standards, such as compliance requirements or internal policies. Supervisors receive real-time feedback on agent performance, making it easier to pinpoint issues and implement corrective actions quickly.

During testing, the PoC successfully analyzed insurance claims calls, delivering detailed compliance reports and practical recommendations to improve agent adherence to regulations and customer service standards.
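The analysis step described above can be sketched roughly as follows. This is an illustration only, not the PoC's actual implementation: the checklist items, the JSON reply format, and the function names are all hypothetical, and the call to the language model is left out so that any LLM client could be plugged in between the two functions.

```python
import json
from dataclasses import dataclass

# Hypothetical checklist; a real deployment would use the company's own rules.
CHECKLIST = [
    "Agent verified the caller's identity",
    "Agent explained the claims process",
    "Agent read the required regulatory disclosure",
]


@dataclass
class ComplianceReport:
    summary: str
    passed: list[str]
    failed: list[str]


def build_prompt(transcript: str, checklist: list[str] = CHECKLIST) -> str:
    """Assemble an instruction that asks the model for a JSON-only answer."""
    items = "\n".join(f"{i}. {item}" for i, item in enumerate(checklist, 1))
    return (
        "You are reviewing an insurance help desk call.\n"
        "For each checklist item, decide whether the agent met it.\n"
        'Reply with JSON only: {"summary": str, "results": [{"item": int, "met": bool}]}\n\n'
        f"Checklist:\n{items}\n\nTranscript:\n{transcript}"
    )


def parse_report(raw_reply: str, checklist: list[str] = CHECKLIST) -> ComplianceReport:
    """Turn the model's JSON reply into a report; unknown item numbers are ignored."""
    data = json.loads(raw_reply)
    passed, failed = [], []
    for result in data.get("results", []):
        index = result.get("item", 0) - 1
        if 0 <= index < len(checklist):
            (passed if result.get("met") else failed).append(checklist[index])
    return ComplianceReport(data.get("summary", ""), passed, failed)
```

Asking for a fixed JSON shape keeps the model's answer machine-readable, so failed checklist items can be routed to a supervisor dashboard instead of being buried in free text.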

Use Case 3: Technical Support – Faster Troubleshooting

In technical support, agents must quickly diagnose issues and follow strict troubleshooting protocols. A speech-to-text system paired with GPT analysis could ease this process by transcribing calls, verifying adherence to guidelines, and identifying recurring problems.

For instance, in a banking scenario, customers often call about issues like failed online payments or trouble accessing their accounts. If the system detects a recurring problem—such as login errors following a recent system update—it can flag the issue for escalation to the IT team. Meanwhile, agents can rely on the tool to provide consistent and accurate guidance, such as walking customers through password resets or suggesting alternative authentication methods.
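A minimal sketch of the recurring-problem check described above, assuming each analyzed call has already been given an issue label by the GPT analysis step. The `CallRecord` type, the tag names, and the threshold are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class CallRecord:
    call_id: str
    issue_tag: str  # label assumed to come from the GPT analysis step


def recurring_issues(calls: list[CallRecord], threshold: int = 3) -> dict[str, int]:
    """Return issue tags seen at least `threshold` times, with their counts."""
    counts = Counter(call.issue_tag for call in calls)
    return {tag: n for tag, n in counts.items() if n >= threshold}


calls = [
    CallRecord("c1", "login_error"),
    CallRecord("c2", "failed_payment"),
    CallRecord("c3", "login_error"),
    CallRecord("c4", "login_error"),
]
flagged = recurring_issues(calls)  # tags that could be escalated to the IT team
```

In practice the count would usually be taken over a time window (for example, the hours following a system update) so that a spike stands out from the normal background of calls.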

Use Case 4: Retail and Commerce – Improving Customer Interactions

A speech-to-text system with GPT analysis could help monitor customer support calls to ensure agents consistently follow return, exchange, and inquiry-handling policies. The system might pinpoint recurring issues—such as customers misunderstanding refund timelines—and provide actionable insights to improve service protocols and communication.

For example, if multiple calls reveal confusion over return eligibility, businesses could refine their policies and train agents to explain the process more effectively. Additionally, the system could analyze frequent after-sales inquiries, offering proactive solutions like automated follow-up emails with product care tips or shipping updates to address concerns before they arise.

Use Case 5: E-Commerce – Post-Purchase Support

A speech-to-text system with GPT analysis can help e-commerce businesses enhance post-purchase support by ensuring agents consistently handle complaints, returns, and inquiries. By analyzing conversations, the system verifies that agents adhere to standardized processes, such as refund timelines or replacement policies, across all interactions.

For instance, if the system detects inconsistencies in how agents resolve similar complaints, it can flag these discrepancies and recommend targeted training to maintain uniform service quality. Additionally, the system could identify recurring post-purchase issues—like frequent complaints about delayed deliveries—enabling businesses to proactively address underlying problems and improve the customer experience.
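One way the inconsistency check above could be approximated: group analyzed calls by complaint type and flag any type that was resolved in more than one way. The tuple layout and the labels are hypothetical and assume the analysis step has already extracted them from each transcript.

```python
from collections import defaultdict


def inconsistent_resolutions(calls):
    """calls: iterable of (complaint_type, agent, resolution) tuples.

    Returns complaint types that were resolved in more than one way,
    mapped to each resolution and the agents who used it.
    """
    by_type = defaultdict(lambda: defaultdict(set))
    for complaint, agent, resolution in calls:
        by_type[complaint][resolution].add(agent)
    return {
        complaint: {res: sorted(agents) for res, agents in resolutions.items()}
        for complaint, resolutions in by_type.items()
        if len(resolutions) > 1
    }


sample_calls = [
    ("late_delivery", "ana", "refund"),
    ("late_delivery", "ben", "voucher"),
    ("damaged_item", "ana", "replacement"),
    ("damaged_item", "ben", "replacement"),
]
report = inconsistent_resolutions(sample_calls)
```

Here only `late_delivery` is reported, because two agents handled it differently; a supervisor could use that as a starting point for targeted training.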

Summary of Speech-to-Text with GPT Sentiment Analysis Use Cases

| Use Case | Key Challenges | AI’s Role | Outcomes |
| --- | --- | --- | --- |
| Sales Performance | Understanding successful sales strategies | Automates call reviews, analyzes tone and content, highlights key moments | Refines sales scripts, improves pitch delivery |
| Insurance Helpdesk | Ensuring compliance and service quality | Transcribes and evaluates calls for adherence to regulations | Provides real-time feedback, improves compliance, and reduces manual review time |
| Technical Support | Diagnosing and resolving issues quickly | Identifies recurring problems, verifies adherence to troubleshooting protocols | Reduces resolution times, improves operational efficiency |
| Retail and Commerce | Consistent handling of customer inquiries | Monitors adherence to policies and identifies trends in common complaints | Refines service protocols, improves communication |
| Post-Purchase | Maintaining consistency in support quality | Tracks agent consistency, highlights recurring issues | Streamlines post-purchase support, resolves complaints faster |

Ready-to-Use Speech-to-Text Workflows: How They Work

Integrating speech-to-text capabilities into helpdesk operations is more efficient with pre-built AI solutions, removing the need to develop custom models. These tools provide APIs and SDKs that allow businesses to incorporate advanced speech recognition and voice processing into their workflows with minimal setup. At Netguru, we often use OpenAI Whisper and Microsoft Azure Speech Services to build custom solutions for our clients.

OpenAI Whisper: Multilingual, Context-Aware Speech Recognition

Whisper is OpenAI’s automatic speech recognition (ASR) model designed for high-accuracy transcription. Unlike traditional speech recognition systems, Whisper is trained on a vast multilingual speech dataset, enabling it to transcribe speech in many languages and translate non-English content into English.

It handles difficult audio conditions, such as background noise and strong accents, well. Under the hood, a transformer-based encoder-decoder network maps audio, converted to spectrograms, to text sequences.

Source: OpenAI.com
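As a rough illustration of the Whisper workflow described above, the sketch below uses the open-source `openai-whisper` Python package. The model size, the file path in the usage note, and the helper names are assumptions; the formatter is kept separate so it can be tried without the package or a GPU.

```python
def transcribe_call(audio_path: str, model_name: str = "base") -> list[dict]:
    """Transcribe a recording and return Whisper's timestamped segments."""
    import whisper  # imported lazily so format_segments works without the package

    model = whisper.load_model(model_name)
    result = model.transcribe(audio_path)
    return result["segments"]


def format_segments(segments: list[dict]) -> str:
    """Render segments as one timestamped line each, ready for later analysis."""
    lines = []
    for seg in segments:
        lines.append(f"[{seg['start']:.1f}s-{seg['end']:.1f}s] {seg['text'].strip()}")
    return "\n".join(lines)
```

With the package installed, `format_segments(transcribe_call("call.mp3"))` would produce a plain-text transcript with timestamps, which can then be passed to the analysis step. `call.mp3` is a placeholder path.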

Microsoft Azure Speech Services: Enterprise-Grade Speech-to-Text & Text-to-Speech

Azure AI Speech provides scalable speech-to-text and text-to-speech services that integrate seamlessly into enterprise workflows. Its speech-to-text API uses deep neural networks to transcribe spoken language into structured text, offering features like speaker diarization and automatic punctuation for improved readability.

Source: ai.azure.com

Twilio Speech Recognition: Real-Time AI-Powered Transcription

Twilio provides real-time speech recognition APIs that transcribe voice interactions instantly. Its ASR models process live audio, analyzing phonemes (smallest units of sound) and predicting words with high accuracy. This enables instant transcription, real-time conversation monitoring, and sentiment analysis for customer service teams.

Source: Twilio

Google Cloud Speech-to-Text: Scalable AI-Powered Transcription

Google Cloud provides speech-to-text and text-to-speech services designed for high scalability and real-time applications. Its speech-to-text API supports over 125 languages and is optimized for various industries, including customer service, healthcare, and finance.

Google’s deep learning models offer speaker diarization, automatic punctuation, and domain adaptation, allowing businesses to fine-tune models for industry-specific terminology. It also provides batch processing and real-time streaming, making it effective for both live and recorded customer interactions.

Source: cloud.google.com

Challenges and Limitations of Speech-to-Text with GPT Analysis

While speech-to-text and GPT analysis offer significant benefits, implementing these technologies comes with challenges.

One of the main concerns is data privacy and security, as handling customer conversations requires strict compliance with regulations like GDPR or CCPA. Sensitive information, such as payment details or personal identifiers, must be protected during transcription and analysis. Businesses can mitigate risks using encryption and anonymization techniques.

Additionally, under the EU AI Act, AI systems that fall into the high-risk category face stricter compliance measures such as transparency, risk assessments, and human oversight, so businesses should check how their own use case is classified. For example, a financial institution might implement end-to-end encryption to safeguard customer account information while still leveraging AI insights from call transcripts.
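The anonymization step mentioned above could, in its simplest form, mask obvious identifiers in a transcript before it is sent for analysis. The sketch below is illustrative only: the two patterns are assumptions and far from complete, and real PII detection needs broader, tested rules or a dedicated service.

```python
import re

# Illustrative patterns only. The card pattern matches any run of 13-16 digits
# (optionally separated by spaces or hyphens), so it can also hit long order IDs.
PATTERNS = {
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}


def redact(transcript: str) -> str:
    """Replace each match with a bracketed label such as [CARD]."""
    for label, pattern in PATTERNS.items():
        transcript = pattern.sub(f"[{label}]", transcript)
    return transcript


sample = "Card 4111 1111 1111 1111, email jane.doe@example.com please"
redacted = redact(sample)
```

Redacting before analysis means the language model never sees the raw identifiers, which narrows the scope of data that has to be protected downstream.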

OpenAI Whisper copes well with background noise, strong accents, and multilingual audio, making it well-suited for call centers and global helpdesk operations. However, accuracy declines when multiple speakers overlap or when the conversation involves technical jargon outside the model’s training data.

Microsoft Azure Speech Services provides enterprise-grade transcription and industry-specific adaptation, but like all ASR models, it can struggle with poor audio quality, specialized terminology, or complex legal and medical contexts. In high-stakes environments where precision is crucial, human oversight is essential to validate and refine transcripts for compliance and accuracy.

Google Cloud Speech-to-Text allows custom model tuning for specific industries like finance, healthcare, and customer service. While this customization improves accuracy for domain-specific language, implementation requires additional training data and continuous fine-tuning.

Despite these capabilities, accuracy limitations remain, especially in challenging environments or conversations with multiple speakers. While AI models continue to improve, human oversight is still necessary in edge cases to validate and refine results where precision is crucial.

Ensuring Accuracy in AI-Powered Transcription

Accuracy is critical in AI transcription for customer-facing roles, as errors can damage trust and cause issues, especially in regulated industries like healthcare. OpenAI’s Whisper, a speech-to-text model used by hospitals through Nabla’s platform, has reportedly processed over 9 million medical conversations for more than 30,000 clinicians and 40 health systems. While Nabla has stated that hallucination errors are rare in their use case, the tool’s limitations are well-documented.

For example, research from Cornell University and the University of Washington found that approximately 1% of transcriptions generated by Whisper contained fabricated content, with 38% of those inaccuracies leading to potential harm or misrepresentation. Such errors were more frequent in conversations with extended pauses, particularly those involving patients with speech disorders like aphasia.

To address these risks, Nabla incorporates a secondary validation process, cross-checking AI-generated transcripts against verified records to ensure only accurate information is used. This highlights the importance of combining automation with human oversight, particularly for businesses adopting AI transcription tools in high-stakes or customer-facing contexts.

Beyond human oversight, additional AI techniques can further enhance transcription accuracy. For example, "word healing" leverages statistical probabilities to correct common grammatical errors—ensuring "I am" is recognized instead of "I is." Generative AI can refine transcripts by structuring sentences more naturally, reducing inconsistencies caused by misinterpretation.

Voice quality also plays a key role in improving transcription results. Noise reduction techniques, such as low-pass filtering, attenuate high-frequency noise outside the main speech band, making voices easier to separate from background sound. More advanced solutions, like machine learning models designed to "clean" audio files, can further enhance clarity by filtering out unwanted sounds.
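To make the low-pass filtering idea concrete, here is a minimal first-order (single-pole) filter in plain Python. It is a teaching sketch under simple assumptions (mono audio as a list of floats, a hypothetical cutoff of 300 Hz in the example); production pipelines would typically rely on a dedicated signal-processing library and steeper filters.

```python
import math


def low_pass(samples: list[float], cutoff_hz: float, sample_rate_hz: float) -> list[float]:
    """First-order low-pass filter: attenuates content above cutoff_hz."""
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)
    dt = 1.0 / sample_rate_hz
    alpha = dt / (rc + dt)  # smoothing factor between 0 and 1
    filtered = []
    previous = samples[0] if samples else 0.0
    for x in samples:
        # Move a fraction alpha of the way toward the new sample.
        previous = previous + alpha * (x - previous)
        filtered.append(previous)
    return filtered


# A rapidly alternating signal (high frequency) is strongly damped,
# while a constant signal (zero frequency) passes through unchanged.
noisy = [1.0, -1.0] * 8
smoothed = low_pass(noisy, cutoff_hz=300.0, sample_rate_hz=8000.0)
```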

By combining AI-driven corrections, noise reduction, and human validation, businesses can reduce hallucinations and improve transcription accuracy.

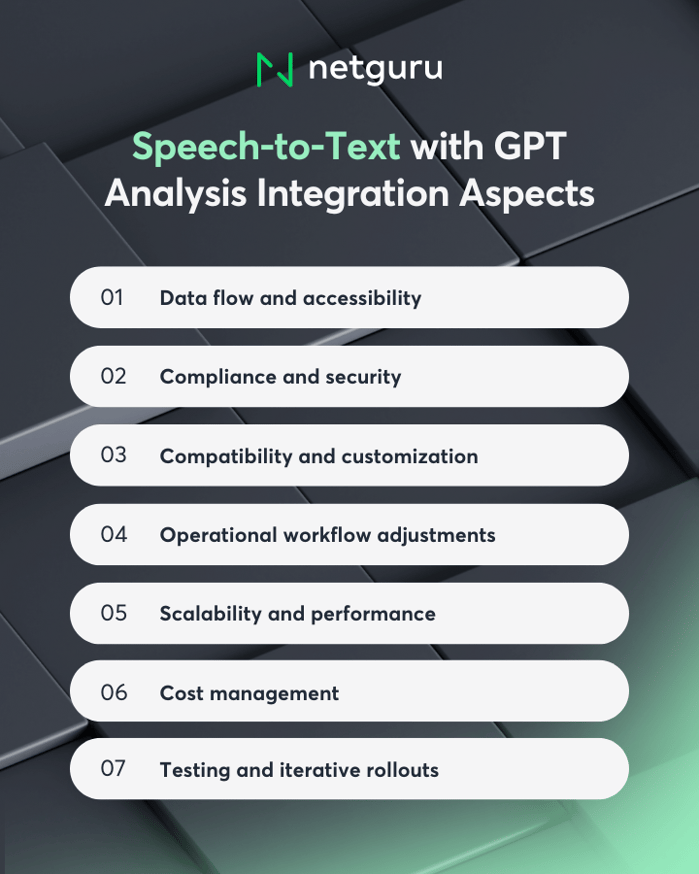

Integration with Existing Tools: Challenges and Considerations

- Data Flow and Accessibility

  - Streamlined Data Pipelines: Many contact centers rely on legacy systems or multiple databases (CRM, ticketing tools, analytics platforms). Ensuring that call transcripts and sentiment scores flow seamlessly between systems requires careful planning of data transfer protocols (e.g., APIs, webhooks) and data mapping.

  - Data Sync vs. Real-Time Processing: Depending on business needs, data can be processed in batches (post-call) or in near-real-time (during the call). Integrations must accommodate the required speed, which can impact your choice of architecture and technology stack.

- Compliance and Security

  - Regulatory Requirements: For industries such as healthcare or finance, integrations must respect standards like GDPR, CCPA, HIPAA, or PCI-DSS. This often entails encryption, anonymization, and careful handling of sensitive transcripts.

  - Authentication and Authorization: Using single sign-on (SSO) or role-based access control (RBAC) can protect sensitive customer data. Ensure that each tool in the workflow enforces the same security protocols.

  - Audit Trails: Implement logging and audit capabilities to track how data moves through systems, who accesses it, and what changes are made. This is crucial for regulatory audits and internal compliance.

- Compatibility and Customization

  - Vendor Lock-In: Some third-party AI or transcription services may limit how easily you can switch providers or add new functionalities. Look for tools offering flexible APIs and broad integration capabilities.

  - Custom Vocabulary and Domain-Specific Models: Businesses in specialized domains (e.g., insurance, medicine, manufacturing) may need custom language models to achieve high accuracy. Integrations should support training or uploading custom vocabularies for niche terminology.

  - Version Control and Upgrades: AI services frequently update models. Plan for how your integrated system will handle new features, version changes, or API deprecations without disrupting service.

- Operational Workflow Adjustments

  - Agent and Supervisor Adoption: Even the best AI integration can fail if end users resist or find the tools cumbersome. Provide training, user-friendly dashboards, and clear onboarding processes to ensure agent and supervisor buy-in.

  - UI/UX Consistency: If sentiment scores or transcriptions appear in a CRM, ensure the user interface is intuitive. Align visual elements, language, and navigation with existing tools so users have a unified experience.

  - Notifications and Alerts: Determine how and when the system alerts supervisors or agents about potential issues (e.g., non-compliance or negative customer sentiment). Real-time pop-ups vs. daily summary reports can dramatically affect how quickly problems are resolved.

- Scalability and Performance

  - Concurrent Call Handling: Large contact centers may handle thousands of calls simultaneously. Integrations should be load-tested to ensure stable performance under peak volumes.

  - Cloud Infrastructure vs. On-Premises: Decide if a fully cloud-based solution is viable or if on-premises or hybrid setups are necessary for privacy or compliance. The choice affects cost, scalability, and latency.

  - Monitoring and Resilience: Use monitoring tools (e.g., Grafana, Kibana, or built-in dashboards from cloud services) to track latency, error rates, and resource usage. Implement fallback mechanisms, such as queueing or secondary transcription services, to handle outages or spikes.

- Cost Management

  - Licensing and Subscription Fees: Speech-to-text and GPT APIs often charge based on usage (minutes of audio, tokens processed, or monthly active agents). Monitor these metrics to prevent unexpected costs.

  - Hidden Integration Costs: Beyond API fees, factor in data storage, network egress (if large audio files are transferred), and additional developer time for maintenance and troubleshooting.

  - ROI Measurement: Continuously measure time saved in manual call reviews, improvement in compliance, or reductions in handling time. Benchmarking these improvements helps justify ongoing expenses.

- Testing and Iterative Rollouts

  - Proof of Concept (PoC) First: Start by integrating a small subset of calls or a single department to validate the solution’s effectiveness and discover pain points early.

  - A/B Testing: Compare the AI-enhanced process against the existing workflow to quantify improvements in agent performance, customer satisfaction, or first-call resolution rates.

  - Feedback Loops: Gather input from agents, supervisors, and customers. Use their insights to fine-tune integrations, improve model accuracy, and refine the user experience.
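The fallback mechanism mentioned under Monitoring and Resilience can be sketched as a simple provider chain: try the primary transcription service, and move to a secondary one if it fails. The provider functions below are stand-ins, not real SDK calls; in a real integration each would wrap a vendor client.

```python
from typing import Callable

Transcriber = Callable[[bytes], str]


def transcribe_with_fallback(
    audio: bytes, providers: list[tuple[str, Transcriber]]
) -> tuple[str, str]:
    """Try each provider in order; return (provider_name, transcript)."""
    errors = []
    for name, transcribe in providers:
        try:
            return name, transcribe(audio)
        except Exception as exc:  # broad on purpose: any failure triggers fallback
            errors.append(f"{name}: {exc}")
    raise RuntimeError("All transcription providers failed: " + "; ".join(errors))


# Stand-in providers for demonstration (hypothetical, no network calls).
def primary(audio: bytes) -> str:
    raise TimeoutError("primary timed out")


def secondary(audio: bytes) -> str:
    return "transcript from backup"


used, text = transcribe_with_fallback(b"", [("primary", primary), ("secondary", secondary)])
```

Returning the provider name alongside the transcript makes it easy to log how often the fallback path is taken, which feeds directly into the monitoring dashboards discussed above.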

How to Get Started with Speech-to-Text + GPT Analysis

Implementing speech-to-text and GPT analysis can transform customer support, but a structured approach is key to success. Here’s how to get started:

- Identify pain points and objectives: Assess your current challenges—such as lengthy call reviews or inconsistent service quality—and define clear goals, like improving call handling or enhancing agent training.

- Choose the right technology stack: Evaluate available tools for speech-to-text transcription and GPT analysis. Look for options that align with your objectives and can integrate with your existing systems.

- Develop and test a proof of concept (PoC): Design a small-scale implementation. Test it in real-world scenarios to evaluate its performance and identify areas for refinement.

- Scale based on feedback and results: Use insights from the PoC to fine-tune the system and roll it out across larger teams or departments. Monitor its impact and continuously improve based on feedback.

Technical Considerations for Implementation

To successfully integrate speech-to-text and GPT-powered analysis, businesses need to plan for scalability, security, and adoption:

- Plan for Architecture – Decide whether to process calls in real-time or batches, as this choice affects integration complexity and system performance.

- Prioritize Security and Compliance – Implement encryption, anonymization, and strict access controls to safeguard sensitive customer data.

- Ensure Scalability – AI transcription and sentiment analysis can be CPU/GPU-intensive. Prepare to scale infrastructure or use cloud-based solutions to handle peak demand.

- Foster User Adoption – Design intuitive interfaces and provide targeted training to encourage employees to effectively use the tools.

- Measure ROI – Track KPIs such as call resolution times, compliance errors, and customer satisfaction to justify costs and drive continuous improvements.
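For the ROI point above, a back-of-the-envelope calculation is often enough to start with. The sketch below compares reviewer time saved against usage-based API cost; every field name and number is a hypothetical placeholder to be replaced with figures from your own pilot.

```python
from dataclasses import dataclass


@dataclass
class PilotMetrics:
    calls_reviewed: int               # calls reviewed per month
    audio_minutes: float              # total audio transcribed per month
    manual_minutes_per_call: float    # reviewer time before automation
    assisted_minutes_per_call: float  # reviewer time with AI summaries
    reviewer_hourly_cost: float
    api_cost_per_audio_minute: float


def monthly_roi(m: PilotMetrics) -> dict[str, float]:
    """Estimate hours saved, labor savings, API cost, and net benefit."""
    minutes_saved = m.calls_reviewed * (
        m.manual_minutes_per_call - m.assisted_minutes_per_call
    )
    hours_saved = minutes_saved / 60
    labor_savings = hours_saved * m.reviewer_hourly_cost
    api_cost = m.audio_minutes * m.api_cost_per_audio_minute
    return {
        "hours_saved": hours_saved,
        "labor_savings": labor_savings,
        "api_cost": api_cost,
        "net_benefit": labor_savings - api_cost,
    }


# Hypothetical pilot: 600 calls, 5,000 audio minutes, review time cut from 15 to 5 minutes.
estimate = monthly_roi(PilotMetrics(600, 5000, 15, 5, 30.0, 0.01))
```

This deliberately ignores storage, network egress, and developer time, so the result is an upper bound on the benefit rather than a full business case.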

Conclusion: AI as the Future of Customer Support Excellence

AI-powered speech-to-text and GPT analysis are reshaping customer support by automating tasks, providing real-time insights, and enhancing service quality. Whether in sales, technical support, or post-purchase care, these tools help streamline operations, identify key trends, and resolve issues faster.

With solutions like OpenAI Whisper, Microsoft Azure Speech Services, and Google Cloud Speech-to-Text, businesses can develop custom AI-driven solutions without building models from scratch. By leveraging existing frameworks and APIs, companies can reduce development costs while adapting AI-powered tools to their specific needs. However, challenges remain: accuracy limitations, compliance requirements, and the need for human oversight must be carefully managed.

By reducing manual workloads and delivering actionable insights, AI enables businesses to respond more efficiently, improve agent training, and strengthen customer relationships. Companies that integrate these technologies gain a competitive edge, optimizing both operational efficiency and customer experience.

Supervised by Patryk Szczygło, R&D Lead at Netguru