Test Automation Strategy: Best Practices & Examples


Automation testing is key to improving quality, reducing deployment time, and lowering software development costs. However, a poor automation approach comes with pitfalls, and an automation strategy based on best practices is the first step to avoiding them.

Current market trends focus on tightening the feedback loop at all product stages, and automation testing supports that goal. Above all, it reduces time to feedback: instead of waiting a day or two for regression results from your QA team, you get results in minutes. In turn, you can deploy more often and get user feedback faster.

That being said, automated tests aren't a silver bullet; when used incorrectly, they waste resources on tests that provide little payoff. However, creating a product-wide testing strategy – identifying priority flows, the right cases for automation, and avoiding anti-patterns – helps you achieve the best results. Below, we dive into the best practices for test automation in more detail.

What’s an automation test strategy?

A software test automation strategy is a lot like a recipe. You don’t start baking a cake without knowing the ingredients you need, the steps to follow, and the result you want to achieve. Likewise, you shouldn’t start automating your test scenarios without a plan.

An automated test strategy should be part of a bigger, product-wide testing plan, so that you create a unified approach. Just like a regular QA test strategy, an automated software testing strategy defines key focus areas: goal, testing levels, types of testing, tools, scope, and test environment. It’s also where you outline priorities and your general approach to automation.

Types of automated tests

Automated tests are similar to manual tests. The difference isn’t their type or goal, but how they’re executed: in one case, a tester carries out the tests and analyzes the results; in the other, an automation script performs these tasks. With that in mind, let's go through common classifications.

The two main testing types are:

- Functional automated testing – these tests verify whether an application’s features match the expectations outlined in the requirements. An example of functional testing is checking whether you can share notes with other users.

- Non-functional testing – this covers requirements other than business functionality, spanning accessibility, performance, security, and usability testing. An example of a non-functional test is checking whether an application can handle high traffic.

Tests can also be divided by type:

- Performance tests – these verify the overall performance of a system by checking response times and stability under load. Subtypes such as load and spike tests focus on specific, challenging scenarios.

- Security tests – here, the application is checked for security issues and vulnerabilities that could be exploited by an attacker, leading to data loss or leaks.

- Accessibility tests – these verify whether applications can be used by people with different needs, including individuals with vision, hearing, or other impairments and those using aids like screen readers.

- Usability tests – these check whether a system is easy to use and intuitive. They’re performed by real users, or under conditions that match real use, and the resulting insights and feedback are collected.

- UI tests – these focus on the graphical interface users interact with, including testing on different device types (desktop, tablet, smartphone) and resolutions.

- Smoke tests – these check critical functionality and paths to make sure the build is stable enough for further testing.

- Regression tests – these involve re-running functional and non-functional tests to ensure that recent changes haven’t introduced new issues into previously working functionality.

There are also different testing levels that are usually presented in the form of a testing pyramid, trophy, honeycomb, or any other testing shape:

- Static – linters, formatters, and type checkers are employed to catch developer typos and code errors.

- Unit – focuses on testing the smallest isolated piece of code, allowing developers to find issues immediately.

- Integration – here, independent modules or components are tested together to make sure they work as expected. The importance of integration tests increases when an application is based on a microservices architecture.

- API – focuses on testing API communication, ensuring requests are properly handled and responses meet expectations.

- End-to-end – verifies multiple components at once by interacting with the GUI and testing specified flow paths. This type of testing most closely resembles how an end user uses the application.
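To make the unit level concrete, here’s a minimal sketch of a unit test written with Python’s built-in `unittest` module. The `share_note` function and its rules are hypothetical, loosely modeled on the note-sharing example above; in a real project, the function would live in application code and the tests in a separate module.

```python
import unittest


def share_note(note: dict, owner: str, recipient: str) -> dict:
    """Hypothetical business rule: only the owner may share a note,
    and a note can't be shared with its own owner."""
    if note["owner"] != owner:
        raise PermissionError("only the owner can share this note")
    if recipient == owner:
        raise ValueError("cannot share a note with yourself")
    # Return a new dict instead of mutating the input.
    shared_with = sorted(set(note.get("shared_with", [])) | {recipient})
    return {**note, "shared_with": shared_with}


class ShareNoteTest(unittest.TestCase):
    def setUp(self):
        # Fresh, isolated input for every test.
        self.note = {"id": 1, "owner": "alice", "shared_with": []}

    def test_owner_can_share(self):
        result = share_note(self.note, "alice", "bob")
        self.assertEqual(result["shared_with"], ["bob"])

    def test_non_owner_cannot_share(self):
        with self.assertRaises(PermissionError):
            share_note(self.note, "mallory", "bob")

    def test_cannot_share_with_self(self):
        with self.assertRaises(ValueError):
            share_note(self.note, "alice", "alice")

    def test_sharing_twice_is_idempotent(self):
        once = share_note(self.note, "alice", "bob")
        twice = share_note(once, "alice", "bob")
        self.assertEqual(twice["shared_with"], ["bob"])
```

Saved as, for example, `test_notes.py`, it runs with `python -m unittest test_notes`. Because the function under test has no external dependencies, these tests run in milliseconds, which is why unit tests usually form the widest layer of the testing pyramid.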

Test automation vs manual testing

With manual testing, a single quality assurance consultant or a team of QAs goes through the test set case by case, executing each test manually, then verifying and recording the results. This manual effort has many benefits, one of which is feedback.

Manual testing is best suited to UI testing in early development stages, usability testing, exploratory testing, and ad-hoc testing.

With QA test automation, you execute previously written scripts, which brings greater precision and speed (automated scripts don’t get tired or skip steps). Automated testing lends itself to regression testing, end-to-end testing, and UI testing of stable interfaces.

Below, we delve further into the advantages and disadvantages of test automation and manual testing.

Manual testing pros and cons – table

| Pros | Cons |
|---|---|
| Provides real user feedback | Slow and time-consuming |
| Flexible | Prone to human errors – less accurate |
| Easy documentation maintenance | Doesn’t scale well |
| Low/no up-front investment | Not reusable |
| Cost-efficient for rapidly changing or short projects | May require high expertise |

Feedback

While automated testing only confirms whether a feature works, a QA can provide feedback on improvements, changes, and the general user experience, which raises the overall quality of the delivered solution. As such, the role of real feedback shouldn’t be underestimated.

Flexibility

Manual testing allows on-the-fly adaptation to changes, and the QA can still execute test scenarios by using their intuition and product knowledge. Meanwhile, automated tests fail until the script is updated to reflect the changes.

Documentation maintenance

Manual test documentation is cheap to maintain and doesn’t require technical expertise or in-depth product knowledge. That means even new QA team members can update test scenarios based on Jira tickets.

Low up-front investment

Manual testing doesn’t require much prior preparation or setup. A QA team can start testing as soon as anything is available: not only the application itself, but also designs and flow charts. By contrast, creating and setting up QA test automation tools takes time.

Rapid changes and short projects

When user flows change rapidly, or if the project is short, the payoff from introducing test automation may be small. The team will likely spend more time setting up, creating, and maintaining automated scripts than it would take to test the application manually.

QA automation testing pros and cons – table

| Pros | Cons |
|---|---|
| Accurate and reliable | Test maintenance and creation require some level of expertise |
| Fast | Inflexible: changes require a script update |
| Effectively handles large volumes of tests | Requires higher up-front investment (for example, script creation) |
| Reusable | No user feedback |
| ROI increases for long-running projects | Higher barrier to entry than manual testing |

Accuracy and reliability

QAs are only human: change blindness or inattentional blindness may occur, especially when a team is under pressure or repeats scenarios numerous times. Meanwhile, the inflexibility of automated testing is one of its great strengths, because every action is executed exactly the same way on every run. Furthermore, some frameworks include session recording on failure, which makes issues found by automated testing easy to track and reproduce.

Fast

If you want to release often, reducing testing time is key. While it can take minutes to manually execute a scenario and verify the results, automated tests usually perform the same tasks in seconds. As a result, test runs that take your team hours or days to execute manually shrink dramatically.

Large volumes of tests

As your test set grows, the QA team needs to grow too to keep execution time at a reasonable level, and that quickly pushes up costs. Large test sets are also tiring to execute, increasing the chance of bugs slipping through. Test automation makes parallelization possible, allowing you to execute multiple tests at the same time.
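Here’s a minimal sketch of the principle using Python’s standard `concurrent.futures` module. The tests are hypothetical stand-ins that only sleep to simulate waiting on the application under test; real runners provide parallelism out of the box (for example, the pytest-xdist plugin or Playwright’s workers), so treat this as an illustration rather than a recommended runner.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def make_fake_test(name: str, duration: float):
    """Hypothetical stand-in for an I/O-bound test (e.g. a browser or API check)."""
    def run() -> tuple[str, bool]:
        time.sleep(duration)  # simulate waiting on the application under test
        return name, True
    return run


def run_in_parallel(tests, workers: int = 4) -> dict[str, bool]:
    """Run independent tests concurrently and collect name -> passed results."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(test) for test in tests]
        return dict(f.result() for f in futures)


if __name__ == "__main__":
    tests = [make_fake_test(f"test_{i}", 0.5) for i in range(4)]
    start = time.perf_counter()
    results = run_in_parallel(tests, workers=4)
    elapsed = time.perf_counter() - start
    # Four 0.5 s tests take roughly 0.5 s in total instead of 2 s.
    print(results, f"{elapsed:.2f}s")
```

Parallelization only pays off when tests are independent: tests that share accounts or data are a common source of flaky parallel runs.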

Reusability

With test automation, once the initial setup and configuration are complete, they can easily be reused in the future. Likewise, good design principles let you reuse test scripts with little alteration and reduce maintenance effort.
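One widely used design principle for reusable UI tests is the Page Object pattern, sketched below in Python: all knowledge of a page’s selectors lives in one class, and tests call its methods. `FakeDriver` is a hypothetical in-memory stand-in for a real browser driver such as Selenium or Playwright, and the selectors and credentials are made up, so the example runs without a browser.

```python
class FakeDriver:
    """Hypothetical in-memory stand-in for a real browser driver."""

    def __init__(self):
        self.fields: dict[str, str] = {}
        self.current_page = "login"

    def fill(self, selector: str, value: str) -> None:
        self.fields[selector] = value

    def click(self, selector: str) -> None:
        # Pretend the app logs in when the (made-up) valid password is submitted.
        if selector == "#submit" and self.fields.get("#password") == "secret":
            self.current_page = "dashboard"


class LoginPage:
    """Page object: the only place that knows the login form's selectors."""
    USERNAME = "#username"
    PASSWORD = "#password"
    SUBMIT = "#submit"

    def __init__(self, driver: FakeDriver):
        self.driver = driver

    def log_in(self, username: str, password: str) -> None:
        self.driver.fill(self.USERNAME, username)
        self.driver.fill(self.PASSWORD, password)
        self.driver.click(self.SUBMIT)


def test_valid_login():
    driver = FakeDriver()
    LoginPage(driver).log_in("alice", "secret")
    assert driver.current_page == "dashboard"


def test_invalid_login():
    driver = FakeDriver()
    LoginPage(driver).log_in("alice", "wrong")
    assert driver.current_page == "login"
```

If the login form’s selectors change, only `LoginPage` needs updating; every test that logs in keeps working unchanged.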

ROI for long-running projects

Automation requires the biggest investment early on, when frameworks need to be set up and tested. After that, work focuses on maintenance and adding tests for new features. The longer you can use pre-created tests, the better your return on investment (ROI).
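A back-of-the-envelope model makes this concrete. The sketch below assumes a deliberately simple cost model: automation costs a one-off setup effort plus a fixed upkeep cost per run, while manual testing costs a fixed effort per run. Break-even is then setup / (manual cost per run − automated cost per run), rounded up. All figures are hypothetical.

```python
import math
from typing import Optional


def break_even_runs(setup_hours: float,
                    manual_hours_per_run: float,
                    automated_hours_per_run: float) -> Optional[int]:
    """Smallest number of runs n at which automation's cumulative cost
    (setup + n * automated) is no higher than manual testing's (n * manual).

    Simplified, assumed cost model; returns None if automation never pays off.
    """
    saving_per_run = manual_hours_per_run - automated_hours_per_run
    if saving_per_run <= 0:
        return None  # each automated run costs as much as a manual one, or more
    return math.ceil(setup_hours / saving_per_run)


if __name__ == "__main__":
    # Hypothetical figures: 80 h to build the suite, 16 h per manual
    # regression run, 2 h of upkeep and triage per automated run.
    print(break_even_runs(80, 16, 2))  # 6
```

With these hypothetical numbers, automation breaks even after 6 regression runs; with a run every two-week release, that’s roughly three months, after which every run is a saving.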

Based on the above, it’s clear that both test automation and manual testing have benefits and a place in testing strategies. Manual testing is invaluable when you have a changing project that requires flexibility and adaptability, or when usability is a high priority. On the other hand, test automation shines when it comes to accuracy, high volumes of tests, and large, long-running projects.

How to build an automation testing strategy

An automation testing strategy should be tailored to the needs of the project; there’s no one-size-fits-all approach. However, there are steps and tips you can use to craft an automation testing strategy.

Step 1: Define the goal and scope

An automation testing strategy requires a clear and measurable goal; otherwise, it’s impossible to know when your intended results are achieved. Once you’ve set a goal, subsequent steps are geared towards reaching it. Your goal may be as simple as automating priority test cases for critical flows.

Defining the scope at this stage helps you avoid wasting time later on. For example, unless you define the scope, automated tests may end up repeating work that's also being done manually. Worse yet, some tests may not be carried out at all, because it wasn’t clear if they should be automated or tested manually. This is a real risk with big QA teams, and the consequences can be severe. With that in mind, define scope precisely.

Step 2: Gather requirements

Brainstorm with stakeholders regarding automation priorities, define goals and KPIs, and write down your testing requirements. This is also the time to outline the types of testing you need to fulfill your requirements, which will later help you pick the right tools.

Step 3: Identify risks

At this point, it’s all about identifying the areas with the biggest business impact, prioritizing them, and automating those first. A risk-based testing approach is helpful here. The right prioritization not only gives you a logical order for automation, but also tells you when to stop automating. Automated low-priority tests, for example, add unnecessary maintenance overhead, become outdated, and often offer little or no value.
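One common way to apply a risk-based approach is to score each area by business impact and likelihood of failure, then automate in descending order of risk. The sketch below assumes 1-to-5 scales, the product of the two as the risk score, and an arbitrary cut-off below which areas stay manual; the feature names and scores are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Area:
    name: str
    impact: int      # business impact if it breaks, 1 (low) to 5 (high)
    likelihood: int  # likelihood of failure, 1 (low) to 5 (high)

    @property
    def risk(self) -> int:
        return self.impact * self.likelihood


def automation_order(areas: list[Area], min_risk: int = 8) -> list[str]:
    """Order areas by risk score; areas below the cut-off aren't automated."""
    ranked = sorted(areas, key=lambda a: a.risk, reverse=True)
    return [a.name for a in ranked if a.risk >= min_risk]


if __name__ == "__main__":
    areas = [
        Area("checkout", impact=5, likelihood=4),               # risk 20
        Area("login", impact=5, likelihood=2),                  # risk 10
        Area("profile avatar upload", impact=2, likelihood=3),  # risk 6
        Area("search filters", impact=3, likelihood=4),         # risk 12
    ]
    print(automation_order(areas))  # ['checkout', 'search filters', 'login']
```

The cut-off is what tells you when to stop: the avatar upload scores below it, so it stays a manual check rather than another script to maintain.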

Step 4: Pick test cases for automation

This stage involves outlining specific flows and features that require automation. Focus first on high-value business areas, but also consider how stable and complex the flows are. Automating test cases that are likely to change a lot in the current or next sprint is usually wasteful. If you already have test cases, highlight the parts to be automated based on your defined goal, risks, and requirements.
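Writing the selection criteria down as an explicit rule makes decisions easier to review with stakeholders. Below is a sketch under stated assumptions: the `Candidate` fields and thresholds are hypothetical, and complexity is approximated by the number of steps in a flow.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    title: str
    business_value: int          # 1 (low) to 5 (high), agreed with stakeholders
    changes_expected_soon: bool  # e.g. a redesign planned for this or the next sprint
    steps: int                   # rough proxy for flow complexity


def should_automate(case: Candidate) -> tuple[bool, str]:
    """Apply hypothetical selection rules and return (decision, reason)."""
    if case.changes_expected_soon:
        return False, "unstable flow: revisit once it settles"
    if case.business_value >= 4:
        return True, "high business value"
    if case.business_value == 3 and case.steps <= 10:
        return True, "moderate value and cheap to automate"
    return False, "low value relative to automation and maintenance cost"


if __name__ == "__main__":
    backlog = [
        Candidate("Checkout with saved card", 5, False, 12),
        Candidate("New onboarding wizard", 5, True, 8),
        Candidate("Export notes to CSV", 3, False, 6),
        Candidate("Legacy admin report", 2, False, 25),
    ]
    for case in backlog:
        decision, reason = should_automate(case)
        print(f"{case.title}: {'automate' if decision else 'keep manual'} ({reason})")
```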

Step 5: Define test data and test environment

Environment and test data management is an important but often overlooked step of a test automation plan. GDPR places strict limits on the personal data you can use, so synthetic data is often the best approach. Test data should be stored in external files for easier maintenance, so that changes to the data don’t affect the test code. Furthermore, the test environment should be stable, and if necessary, testing artifacts should be cleaned up after the test run is complete.
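Here’s a minimal sketch of these ideas using only Python’s standard library: synthetic users generated from a seeded random number generator (no real personal data, and reproducible runs), test data read from an external JSON file, and a temporary directory that is removed automatically after the run. The file name, field names, and `example.test` addresses are illustrative assumptions.

```python
import json
import random
import string
import tempfile
from pathlib import Path


def synthetic_user(rng: random.Random) -> dict:
    """Generate a fake user; no real personal data is involved."""
    name = "".join(rng.choices(string.ascii_lowercase, k=8))
    # .test is a reserved top-level domain, so these addresses reach no real mailbox.
    return {"username": name, "email": f"{name}@example.test"}


def load_test_data(path: Path) -> dict:
    """Read test data from an external file, so editing data never touches test code."""
    return json.loads(path.read_text(encoding="utf-8"))


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed keeps the synthetic data reproducible
    # TemporaryDirectory deletes its contents on exit, even if an assertion fails.
    with tempfile.TemporaryDirectory() as tmp:
        data_file = Path(tmp) / "users.json"
        # In a real project this file would be versioned alongside the tests;
        # it's written here only to keep the example self-contained.
        data_file.write_text(
            json.dumps({"users": [synthetic_user(rng) for _ in range(3)]}),
            encoding="utf-8",
        )
        users = load_test_data(data_file)["users"]
        assert len(users) == 3
        print(users[0]["email"])
```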

Step 6: Pick technology and framework

This depends on your project and team. For example, if your team has experience with Python or Java, picking a framework that only supports JavaScript may not be the best idea for your testing process – unless it provides features that are key to achieving your goals.

Therefore, it’s useful to carry out technology and framework research at this stage. Creating a proof of concept (PoC) is also a good idea. That way, you can showcase the PoC to stakeholders and decide whether to move forward with the framework. Overall, it’s important to pick a tool that matches your needs.

Step 7: Track progress

It’s also important to track your work so you know what stage of the testing process you’re at and what still needs to be done. Below are two handy methods:

- Track automation status in your preferred test management tool. Three statuses – planned, automated, and outdated – should be enough, but you can introduce more as needed.

- Create a backlog and track work progress in your ticket management system. This makes allocating work easy.

Include tracking information in your strategy, ensuring team members are aware of your chosen method(s).
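If your test management tool can export statuses, a small script can summarize them. The sketch below models the three statuses as an `Enum` and computes the share of cases with an up-to-date script; the test case IDs are hypothetical, and how you fetch statuses depends entirely on your tool.

```python
from collections import Counter
from enum import Enum


class AutomationStatus(Enum):
    PLANNED = "planned"
    AUTOMATED = "automated"
    OUTDATED = "outdated"


def status_summary(cases: dict[str, AutomationStatus]) -> dict[str, int]:
    """Count test cases per status, including statuses with zero cases."""
    counts = Counter(cases.values())
    return {status.value: counts[status] for status in AutomationStatus}


def automated_share(cases: dict[str, AutomationStatus]) -> float:
    """Fraction of cases whose automated script is up to date."""
    if not cases:
        return 0.0
    return sum(s is AutomationStatus.AUTOMATED for s in cases.values()) / len(cases)


if __name__ == "__main__":
    cases = {
        "TC-101": AutomationStatus.AUTOMATED,
        "TC-102": AutomationStatus.AUTOMATED,
        "TC-103": AutomationStatus.PLANNED,
        "TC-104": AutomationStatus.OUTDATED,
    }
    print(status_summary(cases))   # {'planned': 1, 'automated': 2, 'outdated': 1}
    print(automated_share(cases))  # 0.5
```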

Step 8: Reporting

Analyzing failed tests and reporting issues are important parts of the automation process. Despite that, fixing the underlying issues often takes longer than it should. Failed tests generally stem from one of four problem areas, and a process should be in place to handle each:

- Test environment issue – create a ticket for DevOps.

- Bug in application – create a bug ticket for developers.

- Outdated automation script – create a ticket for the QA team.

- Bug in automation script – create a bug ticket for the QA team.
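Encoding the four cases above as a routing table keeps triage consistent across the team. This is a minimal sketch: the `FailureCause` names, the `task`/`bug` ticket types, and the team labels are assumptions, and actually creating the ticket would go through your tracker’s own integration.

```python
from enum import Enum


class FailureCause(Enum):
    ENVIRONMENT = "test environment issue"
    APP_BUG = "bug in application"
    OUTDATED_SCRIPT = "outdated automation script"
    SCRIPT_BUG = "bug in automation script"


# (ticket type, owning team) for each cause, mirroring the four cases above.
ROUTING = {
    FailureCause.ENVIRONMENT: ("task", "DevOps"),
    FailureCause.APP_BUG: ("bug", "Developers"),
    FailureCause.OUTDATED_SCRIPT: ("task", "QA"),
    FailureCause.SCRIPT_BUG: ("bug", "QA"),
}


def ticket_for(test_name: str, cause: FailureCause) -> dict:
    """Build a ticket payload for a failed test once its cause is known."""
    ticket_type, team = ROUTING[cause]
    return {
        "title": f"{test_name} failed: {cause.value}",
        "type": ticket_type,
        "team": team,
    }


if __name__ == "__main__":
    print(ticket_for("test_checkout_with_saved_card", FailureCause.APP_BUG))
```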

Step 9: Define maintenance process

Test automation isn’t a single action; it’s a process. Once tests are written, they need to be maintained and the results monitored. The best way to make sure your tests don’t end up outdated and forgotten is to formalize the testing process. Creating a ticket to update outdated scripts is the first step; you also need to define priorities and timelines. Remember: Every outdated test is a potential bug that might end up in the production environment.

Selecting the best automation testing tool

Picking QA test automation tools for your use case can be challenging – especially if you don’t have much experience. Below are some handy tips.

Newer isn’t always better

Chasing after a new or popular tool is tempting, but proven and reliable solutions are often the better choice. Established frameworks may not be “trendy”, but they have bigger communities you can reach out to in case of problems.

Your team’s experience

Try to pick a programming language your team has the most experience with. Or, consider collaborating with devs, who may have more expertise in your language of choice.

Costs

There are both free and paid solutions, so you need to estimate the long-term “costs” of going with each option. Paid tools are usually easier to set up and create tests with. However, as the test set grows, costs can quickly spiral out of control, especially when pricing is based on snapshot amounts (usually visual regression tools), run time, or number of tests run.

Similarly, free tools may turn out to be more expensive because of “hidden” costs. For example, although there’s no monetary outlay, you may pay via the time you spend configuring, creating tests, and dealing with issues.

Pugh Matrix

Analyze differences and compare options by using the Pugh Matrix technique. It helps you pinpoint the features that matter in each solution and make informed decisions.
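In a Pugh matrix, one option serves as the baseline and every other option is rated against it on each criterion (+1 better, 0 same, -1 worse); weighting the criteria and summing gives each option a score. The sketch below implements that weighted variant. The criteria, weights, and ratings are hypothetical, and the tools are deliberately anonymous.

```python
def pugh_scores(weights: dict[str, int],
                ratings: dict[str, dict[str, int]]) -> dict[str, int]:
    """Weighted Pugh matrix totals.

    `ratings` maps each option to per-criterion ratings relative to the baseline:
    +1 better, 0 same, -1 worse. Missing criteria count as 0 (same as baseline).
    """
    totals = {}
    for option, per_criterion in ratings.items():
        unknown = set(per_criterion) - set(weights)
        if unknown:
            raise KeyError(f"{option}: unknown criteria {sorted(unknown)}")
        if any(r not in (-1, 0, 1) for r in per_criterion.values()):
            raise ValueError(f"{option}: ratings must be -1, 0 or +1")
        totals[option] = sum(w * per_criterion.get(c, 0) for c, w in weights.items())
    return totals


if __name__ == "__main__":
    weights = {"team language fit": 3, "community size": 2, "cost": 2, "parallel runs": 1}
    ratings = {
        "Tool A (baseline)": {},
        "Tool B": {"team language fit": 1, "community size": -1, "parallel runs": 1},
        "Tool C": {"team language fit": -1, "community size": 1, "cost": 1},
    }
    print(pugh_scores(weights, ratings))
    # {'Tool A (baseline)': 0, 'Tool B': 2, 'Tool C': 1}
```

A positive total means the option beats the baseline overall. It can be worth re-running the comparison with the leading option as the new baseline to check that the ranking holds.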

Try before committing

If you can’t choose between two to three solutions, try each one out before committing. Automate the same few test cases in each tool, benchmark them against each other, and decide which one’s the best. It’s better to spend a little extra time on proof of concept tests than sink dozens of hours working with frameworks that ultimately don’t meet your expectations.

Consult others

Don’t be afraid to consult with your team members and developers; they aren’t testers, but you’ll be surprised how knowledgeable they are when it comes to testing frameworks. Maybe one of them can recommend a tool that’s just right for your needs.

Tools for automation in testing

There are hundreds of QA testing automation tools available on the market; choosing the one that best suits your requirements can make your head spin. To make it easier, check the following list of tools we often use at Netguru.

Cypress

This is an end-to-end web test automation tool that offers control over network traffic. A few features make it stand out: you can make assertions on network calls, stub responses to trigger edge cases easily, and stub or spy on functions. Due to its popularity, there are many extensions that bring additional features to Cypress, like cypress-axe, which allows accessibility testing, or Percy for visual regression testing.

Cypress Dashboard – overview of test run results, source: Cypress

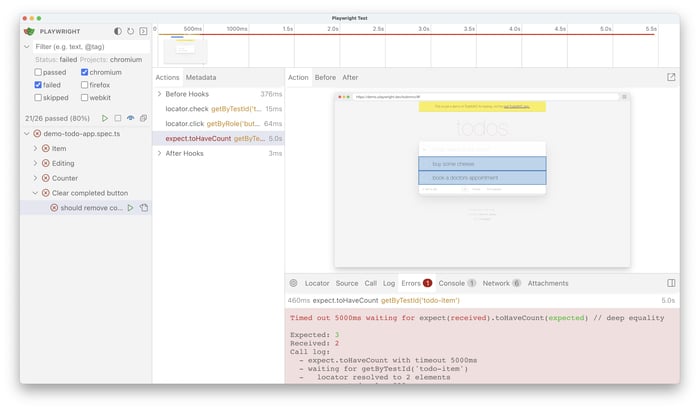
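Cypress does response stubbing in JavaScript with its own commands. As a language-neutral illustration of the same idea (not Cypress’s API), here’s a Python sketch that stubs an HTTP call with the standard `unittest.mock` module to force a server-error edge case on demand. `fetch_notes` and the URL are hypothetical.

```python
import json
import urllib.error
import urllib.request
from unittest import mock


def fetch_notes(url: str) -> list:
    """Hypothetical client code: returns notes, or [] if the server errors out."""
    try:
        with urllib.request.urlopen(url, timeout=5) as resp:
            return json.loads(resp.read())
    except urllib.error.HTTPError as err:
        if err.code >= 500:
            return []  # degrade gracefully instead of crashing the page
        raise


def test_server_error_returns_empty_list():
    # Stub the network call so the edge case happens on demand, with no real server.
    error = urllib.error.HTTPError("https://api.example.test/notes", 503,
                                   "Service Unavailable", None, None)
    with mock.patch("urllib.request.urlopen", side_effect=error):
        assert fetch_notes("https://api.example.test/notes") == []


def test_success_returns_parsed_notes():
    with mock.patch("urllib.request.urlopen") as urlopen:
        # urlopen is used as a context manager, so stub what __enter__ returns.
        urlopen.return_value.__enter__.return_value.read.return_value = b'[{"id": 1}]'
        assert fetch_notes("https://api.example.test/notes") == [{"id": 1}]
```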

Playwright

Playwright is an open-source tool used for automating tasks in web browsers. It works across Chromium, WebKit, and Firefox, as well as branded browsers such as Google Chrome and Microsoft Edge. It allows you to write tests in various programming languages (JavaScript and TypeScript, Python, Java, .NET). It offers features like headless testing, recording browser interactions, a UI test runner, and automatic waiting, making web testing more efficient and reliable.

Selenium

Proven and tested, Selenium has been on the market for many years and is constantly improving. It provides a lot of flexibility and supports multiple modern browsers plus a variety of programming languages: Ruby, JavaScript, C#, Python, and Java.

Selenium IDE – control flow structure, source: Selenium

Supertest

This library for creating API tests provides high-level abstraction for HTTP testing and allows easy implementation of tests. It offers simplicity, is easy to set up, and is well-documented.

Supertest – assertion example with async/await syntax, source: GitHub

Pact

Here you have a framework for creating contract tests. Instead of running slower and more expensive integration tests, you can test services in isolation against documentation – a “contract”. The contract includes information about data that should be sent and received, allowing quick verification of the expected response versus the actual state.

Pact Broker – dashboard with a list of published pacts, source: Pact Broker

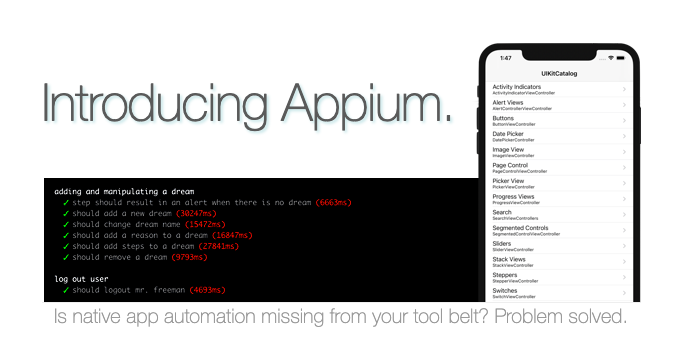
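To illustrate the idea behind contract testing (this is not Pact’s API), here’s a hand-rolled Python sketch: a contract describing the response a consumer expects, and a check of a provider’s actual response against it. In Pact itself, the contract is generated from the consumer’s tests and verified against the provider, with much richer matching rules; the endpoint, fields, and service names below are hypothetical.

```python
# Hypothetical contract between a web client (consumer) and a notes API (provider).
CONTRACT = {
    "consumer": "notes-web",
    "provider": "notes-api",
    "request": {"method": "GET", "path": "/notes/1"},
    "response": {
        "status": 200,
        "body": {"id": int, "title": str, "shared_with": list},
    },
}


def verify(contract: dict, status: int, body: dict) -> list[str]:
    """Compare an actual provider response with the contract; return mismatches."""
    expected = contract["response"]
    problems = []
    if status != expected["status"]:
        problems.append(f"status: expected {expected['status']}, got {status}")
    for field, expected_type in expected["body"].items():
        if field not in body:
            problems.append(f"missing field: {field}")
        elif not isinstance(body[field], expected_type):
            problems.append(
                f"{field}: expected {expected_type.__name__}, "
                f"got {type(body[field]).__name__}"
            )
    return problems


if __name__ == "__main__":
    # A provider change that turned `id` into a string and dropped `shared_with`:
    print(verify(CONTRACT, 200, {"id": "1", "title": "Groceries"}))
    # ['id: expected int, got str', 'missing field: shared_with']
```

Extra fields in the provider’s response are deliberately ignored: the contract only pins down what the consumer actually uses, so the provider stays free to add data.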

Appium

This is an automation tool for native, mobile web, and hybrid applications on iOS mobile, Android mobile, and Windows desktop platforms. Since it’s cross-platform, it allows code reuse between iOS, Android, and Windows.

Appium – test result example, source: Appium

Optimizing automation in testing

Test automation frameworks and the right automation testing tools supported by a carefully crafted strategy help you keep your release cycle smooth, meaning you can deliver features more frequently and improve product quality. While there’s a lot to consider and there are many tools to mull over, implementing the optimal software testing strategy for you and your business simplifies the software development process.